🥝KIWI: A Dataset of Knowledge-Intensive Writing Instructions for Answering Research Questions

Fangyuan Xu, Kyle Lo, Luca Soldaini, Bailey Kuehl, Eunsol Choi, David Wadden

About

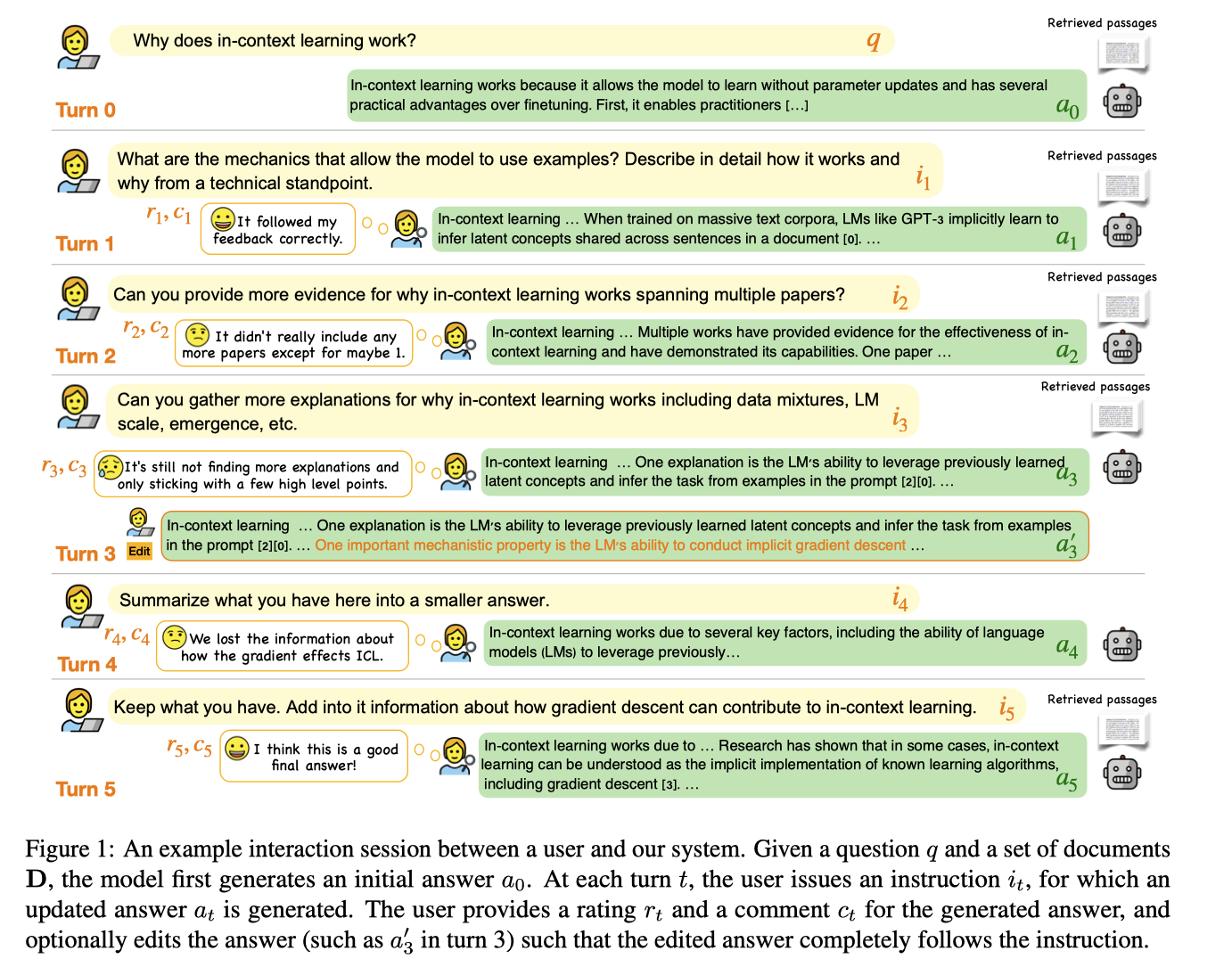

We introduce 🥝KIWI, a dataset of Knowledge-Intensive Writing Instructions for answering research questions. Given a research question, an initial model-generated answer, and a set of relevant papers, an expert annotator iteratively issues instructions for the model to revise and improve its answer. We collect 1,260 interaction turns from 234 interaction sessions with three state-of-the-art LLMs (GPT-4, GPT-3.5-turbo, LLaMA-2-chat-70b). Each turn includes a user instruction, a model response, and a human evaluation of the model response. Through a detailed analysis of the collected responses, we find that all models struggle to incorporate new information into an existing answer and to perform precise and unambiguous edits. Further, we find that models struggle to judge whether their outputs successfully followed user instructions, with accuracy at least 10 points short of human agreement. Our findings indicate that 🥝KIWI will be a valuable resource for measuring progress and improving LLMs' instruction-following capabilities on knowledge-intensive writing tasks.
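To make the session and turn structure described above concrete, here is a minimal Python sketch of how one interaction session might be represented in memory. All class and field names (`Turn`, `Session`, `paper_ids`, `evaluation`, and so on) are hypothetical and chosen for illustration only; they are not taken from the released data files, and the actual schema and evaluation labels may differ.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Turn:
    """One interaction turn (hypothetical field names)."""
    instruction: str  # instruction issued by the expert annotator
    response: str     # model response to that instruction
    evaluation: str   # human evaluation of the response (label set assumed, not specified here)


@dataclass
class Session:
    """One interaction session (hypothetical field names)."""
    question: str         # the research question
    initial_answer: str   # initial model-generated answer
    paper_ids: List[str]  # identifiers of the relevant papers (assumed representation)
    model: str            # e.g. "GPT-4", "GPT-3.5-turbo", "LLaMA-2-chat-70b"
    turns: List[Turn] = field(default_factory=list)


def mean_turns_per_session(sessions: List[Session]) -> float:
    """Average number of turns per session; 0.0 for an empty list."""
    if not sessions:
        return 0.0
    return sum(len(s.turns) for s in sessions) / len(sessions)
```

With the counts reported above (1,260 turns over 234 sessions), this average works out to roughly 5.4 turns per session.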

Contact

For any questions, please contact Fangyuan Xu.