Qixing Huang

Learning 3D Foundation Models from Images

|

Souhail Hadgi, Luca Moschella, Andrea Santilli, Diego Gomez, Qixing Huang, Emanuele Rodolà, Simone Melzi, and Maks Ovsjanikov. Escaping Plato's Cave: Towards the Alignment of 3D and Text Latent Spaces. Computer Vision and Pattern Recognition (CVPR) 2025. |

|

Yuezhi Yang, Qimin Chen, Vladimir Kim, Siddhartha Chaudhuri, Qixing Huang, and Zhiqin Chen. GenVDM: Generating Vector Displacement Maps From a Single Image. Computer Vision and Pattern Recognition (CVPR) 2025. |

|

Hanwen Jiang, Zexiang Xu, Desai Xie, Ziwen Chen, Haian Jin, Fujun Luan, Zhixin Shu, Kai Zhang, Sai Bi, Xin Sun,Jiuxiang Gu, Qixing Huang, Georgios Pavlakos, and Hao Tan. MegaSynth: Scaling Up 3D Scene Reconstruction with Synthesized Data. Computer Vision and Pattern Recognition (CVPR) 2025. |

|

Hanwen Jiang, Haitao Yang, Qixing Huang and Georgios Pavlakos. Real3D: Scaling Up Large Reconstruction Models with Real-World Images. https://arxiv.org/abs/2406.08479 |

|

[Arxiv2024a] Zhengyi Zhao, Chen Song, Xiaodong Gu, Yuan Dong, Qi Zuo,Weihao Yuan, Zilong Dong, Liefeng Bo and Qixing Huang. An Optimization Framework to Enforce Multi-View Consistency for Texturing 3D Meshes Using Pre-Trained Text-to-Image Models. https://arxiv.org/abs/2403.15559 |

|

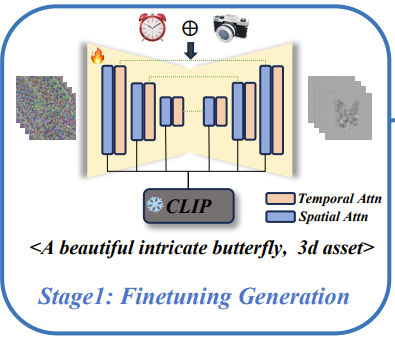

[Arxiv2024b] Qi Zuo, Xiaodong Gu, Lingteng Qiu, Yuan Dong, Zhengyi Zhao,Weihao Yuan, Rui Peng, Siyu Zhu, Zilong Dong,Liefeng Bo and Qixing Huang. VideoMV: Consistent Multi-View Generation Based on Large Video Generative Model. https://arxiv.org/abs/2403.12010 |

|

[ICLR24] Hanwen Jiang, Zhenyu Jiang, Yue Zhao and Qixing Huang. LEAP: Liberate Sparse-view 3D Modeling from Camera Poses. International Conferences on Learning Representations (ICLR) 2024 |

|

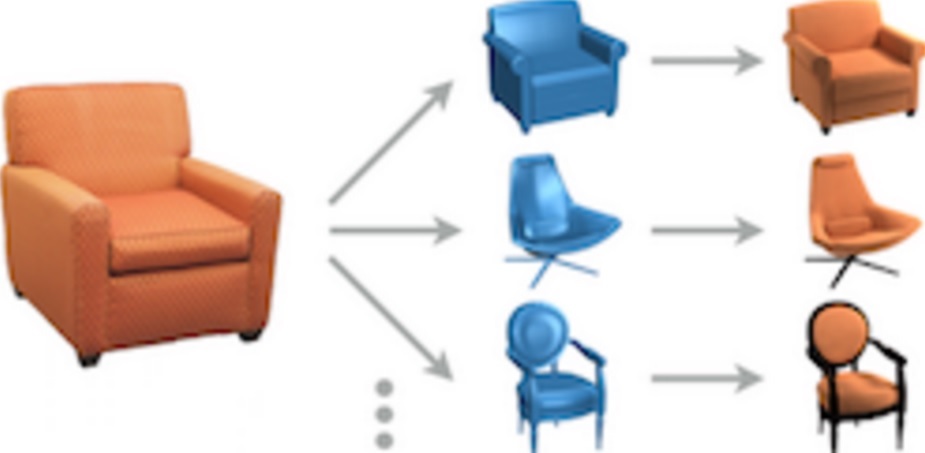

[SIGA16] Tuanfeng Wang, Hao Su, Qixing Huang, Jingwei Huang, Leonidas Guibas, and Niloy J. Mitra. Unsupervised Texture Transfer from Images to Model Collections. ACM Transaction on Graphics 35(6) (Proc. Siggraph Asia 2016). |