Qixing Huang

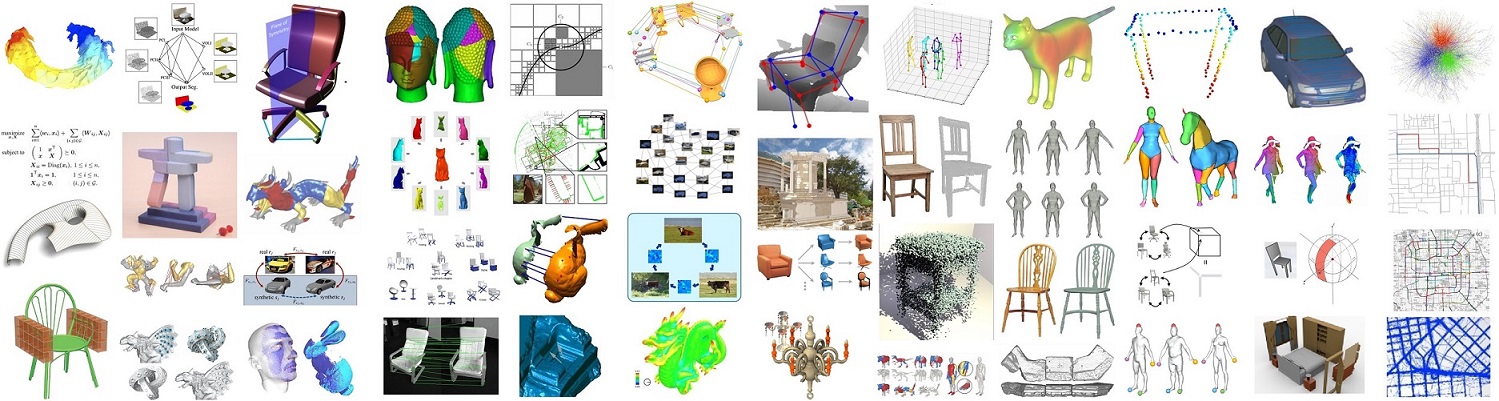

My research sits at the intersection of Computer Graphics, Computer Vision, and Machine Learning, and addresses both fundamental problems and practical applications in this area. In particular, I explore 3D representations for applying machine learning to 3D geometric data. Rather than focusing on a single representation (e.g., a point cloud or a volumetric grid), my recent work aims to unlock the potential of hybrid 3D representations.

I also study algorithms for analyzing and processing big 3D data. A central problem in this area is how to build maps across a collection of objects, i.e., map synchronization. This problem also generalizes to jointly learning neural networks and is tied to hybrid 3D representations.

My most recent research focuses on learning 3D generative foundation models. One central question is how to incorporate regularization losses that preserve geometric, physical, and topological priors during 3D shape generation. Another is how to learn 3D foundation models from both image and shape data, to address the fact that very limited 3D data is available for training.

On the algorithmic side, I develop and apply cutting-edge convex and non-convex optimization techniques for analyzing and processing 3D data, and I teach a graduate-level class on numerical optimization. On the practical side, I have worked on a variety of topics in 3D Vision, Geometry Processing (Shape Analysis, Geometric Modeling and Synthesis, Registration and Reconstruction, and 3D Printing), Image Understanding and Processing, and Geographical Information Systems.