- size : big, medium, small

- color : red, blue, green

- shape : square, circle, triangle

For each of the following two training sets, show the consistent hypothesis, if any, learned by the maximally specific conjunctive learning algorithm. If no consistent conjunctive hypothesis can be learned, state this clearly and explain why.

Training Set 1:

- < small, red, circle > : positive

- < small, red, triangle > : positive

- < small, green, circle > : negative

- < big, red, triangle > : negative

Training Set 2:

- < big, blue, circle > : positive

- < medium, blue, triangle > : positive

- < small, blue, square > : positive

- < small, blue, circle > : negative

- < big, green, square > : negative
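The maximally specific conjunctive learner can be sketched in a Find-S style. It starts from the first positive example, replaces every feature that disagrees with a later positive by "?" (any value), and then checks the result against the negatives. The names `most_specific_conjunction`, `covers`, and `is_consistent` are hypothetical helpers introduced only for this illustration.

```python
# Instances are (size, color, shape) tuples; "?" in a hypothesis means "any value".
ANY = "?"

def most_specific_conjunction(examples):
    """Start from the first positive and generalize each mismatching feature to "?"."""
    positives = [x for x, is_positive in examples if is_positive]
    if not positives:
        return None  # no positives: the match-nothing hypothesis is most specific
    h = list(positives[0])
    for x in positives[1:]:
        for i, value in enumerate(x):
            if h[i] != value:
                h[i] = ANY
    return tuple(h)

def covers(h, x):
    if h is None:
        return False  # the match-nothing hypothesis covers no instance
    return all(c == ANY or c == v for c, v in zip(h, x))

def is_consistent(h, examples):
    # Consistent means: covers every positive and no negative.
    return all(covers(h, x) == is_positive for x, is_positive in examples)

TRAINING_SET_1 = [
    (("small", "red", "circle"), True),
    (("small", "red", "triangle"), True),
    (("small", "green", "circle"), False),
    (("big", "red", "triangle"), False),
]
TRAINING_SET_2 = [
    (("big", "blue", "circle"), True),
    (("medium", "blue", "triangle"), True),
    (("small", "blue", "square"), True),
    (("small", "blue", "circle"), False),
    (("big", "green", "square"), False),
]

for name, data in [("Set 1", TRAINING_SET_1), ("Set 2", TRAINING_SET_2)]:
    h = most_specific_conjunction(data)
    print(name, h, "consistent" if is_consistent(h, data) else "inconsistent")
```

For Set 1 this yields < small, red, ? >, which excludes both negatives. For Set 2 it yields < ?, blue, ? >, which also covers the negative < small, blue, circle >. Any conjunction covering all three positives must leave size and shape unconstrained, so it is at least as general as < ?, blue, ? > and covers that negative too. Therefore no consistent conjunctive hypothesis exists for Set 2.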

What is the total number of possible binary functions over this instance space?
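Assuming the instance space is exactly the three features listed at the top, each with three values, the count follows from two facts. There are 27 distinct instances, and a binary function picks one of two labels for each instance independently:

```latex
|X| = |\text{size}| \cdot |\text{color}| \cdot |\text{shape}| = 3 \cdot 3 \cdot 3 = 27,
\qquad
\bigl|\{\, f : X \to \{0, 1\} \,\}\bigr| = 2^{|X|} = 2^{27} = 134{,}217{,}728 .
```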

What is the total number of semantically distinct conjunctive hypotheses over this instance space (assuming negation is not allowed)?
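One way to check the count is to enumerate hypotheses and group them by the set of instances they accept. This sketch assumes the common textbook hypothesis language: each feature constraint is a specific value, "?" (any value), or an empty constraint that no value satisfies. Two hypotheses are treated as semantically identical when they accept exactly the same instances. The `EMPTY` sentinel and the `covers` helper are illustrative names, not part of the original question.

```python
from itertools import product

SIZES = ("big", "medium", "small")
COLORS = ("red", "blue", "green")
SHAPES = ("square", "circle", "triangle")
DOMAINS = (SIZES, COLORS, SHAPES)
ANY, EMPTY = "?", None  # "?" accepts any value; EMPTY accepts no value

instances = list(product(*DOMAINS))  # all 27 instances

def covers(h, x):
    # An EMPTY constraint never equals a real value, so it rejects every instance.
    return all(c == ANY or c == v for c, v in zip(h, x))

# Every syntactic conjunction: each feature is a value, "?", or EMPTY (5^3 = 125).
syntactic = list(product(*[d + (ANY, EMPTY) for d in DOMAINS]))

# Semantically distinct hypotheses = distinct sets of covered instances.
extensions = {frozenset(x for x in instances if covers(h, x)) for h in syntactic}
print(len(syntactic), len(extensions))
```

Under this convention, the 4^3 = 64 hypotheses without an empty constraint all accept different instance sets. Every hypothesis containing an empty constraint accepts nothing, so those collapse into one additional hypothesis, giving 4^3 + 1 = 65.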

- < big, blue, circle > : positive

- < medium, green, triangle > : positive

- < big, blue, square > : positive

- < small, blue, circle > : negative

- < big, green, square > : negative

Explicitly show the gain of each feature at each choice point. If there is a tie in gain, prefer the feature that appears first in the list (size, color, shape).
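Assuming the task here is ID3-style decision-tree induction on the five examples above, the gain at each choice point can be computed as below. Gain is the entropy of the node's examples minus the size-weighted entropy of the subsets produced by a split. Two choices in the sketch are assumptions, since the question does not specify them: a value with no examples becomes a leaf labeled with the parent's majority class, and branches are built for every value in each feature's domain.

```python
from collections import Counter
from math import log2

FEATURES = ("size", "color", "shape")  # also the tie-break order
DOMAINS = (
    ("big", "medium", "small"),
    ("red", "blue", "green"),
    ("square", "circle", "triangle"),
)
EXAMPLES = [
    (("big", "blue", "circle"), "+"),
    (("medium", "green", "triangle"), "+"),
    (("big", "blue", "square"), "+"),
    (("small", "blue", "circle"), "-"),
    (("big", "green", "square"), "-"),
]

def entropy(examples):
    counts = Counter(label for _, label in examples)
    n = len(examples)
    return -sum((c / n) * log2(c / n) for c in counts.values())

def gain(examples, i):
    """Entropy of the set minus the weighted entropy after splitting on feature i."""
    n = len(examples)
    remainder = 0.0
    for value in DOMAINS[i]:
        subset = [(x, y) for x, y in examples if x[i] == value]
        if subset:
            remainder += len(subset) / n * entropy(subset)
    return entropy(examples) - remainder

def majority(examples):
    return Counter(label for _, label in examples).most_common(1)[0][0]

def id3(examples, features, depth=0):
    """Return a leaf label or a nested dict {feature: {value: subtree}}."""
    if len({label for _, label in examples}) == 1 or not features:
        return majority(examples)
    gains = {i: gain(examples, i) for i in features}
    for i in features:
        print("  " * depth + f"gain({FEATURES[i]}) = {gains[i]:.3f}")
    # max() returns the first maximal item, so ties go to the earlier feature;
    # rounding guards against floating-point noise between mathematically equal gains.
    best = max(features, key=lambda i: round(gains[i], 9))
    rest = [i for i in features if i != best]
    branches = {}
    for value in DOMAINS[best]:
        subset = [(x, y) for x, y in examples if x[best] == value]
        # An empty branch is labeled with the parent node's majority class.
        branches[value] = id3(subset, rest, depth + 1) if subset else majority(examples)
    return {FEATURES[best]: branches}

print(id3(EXAMPLES, [0, 1, 2]))
```

With these definitions, the root gains are about 0.420 (size), 0.020 (color), and 0.171 (shape), so the tree splits on size first. In the big branch, the gains are about 0.918 (color) and 0.252 (shape), so that branch splits on color. The medium and small branches are already pure.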

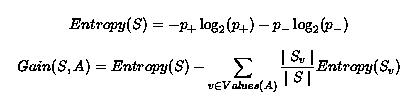

Below are the relevant grammar rules:

S -> NP VP, S -> VP, NP -> Adj NP, NP -> N, NP -> NP PP, VP -> V, VP -> V NP, VP -> VP PP, PP -> Prep NP, Prep -> like, N -> guard, N -> gold, N -> tests, V -> guard, V -> tests, V -> like, Adj -> gold, Adj -> guard
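These rules are not in Chomsky normal form, because S -> VP, NP -> N, and VP -> V are unary. A CKY-style chart can still handle them by applying the unary rules to each cell after its binary step. This works here because the grammar has no unary cycles. The sketch below counts the parse trees of S for a word sequence, which makes ambiguity visible. The function `count_parses` and the example sentence "guard tests like gold" are hypothetical illustrations built only from the lexicon above. The sentence actually used in the question is presumably in the image and is not reproduced here.

```python
from collections import defaultdict

# Binary rules (parent, left child, right child), transcribed from the grammar.
BINARY = [
    ("S", "NP", "VP"),
    ("NP", "Adj", "NP"),
    ("NP", "NP", "PP"),
    ("VP", "V", "NP"),
    ("VP", "VP", "PP"),
    ("PP", "Prep", "NP"),
]
# Unary rules, ordered so VP -> V is applied before S -> VP (no unary cycles exist).
UNARY = [("NP", "N"), ("VP", "V"), ("S", "VP")]
# Lexical rules, grouped by word.
LEXICON = {
    "like": ["Prep", "V"],
    "guard": ["N", "V", "Adj"],
    "gold": ["N", "Adj"],
    "tests": ["N", "V"],
}

def apply_unary(cell):
    for parent, child in UNARY:
        cell[parent] += cell[child]

def count_parses(words, start="S"):
    """chart[i][j][A] = number of distinct trees rooted in A spanning words[i:j]."""
    n = len(words)
    chart = [[defaultdict(int) for _ in range(n + 1)] for _ in range(n + 1)]
    for i, word in enumerate(words):
        for tag in LEXICON.get(word, []):
            chart[i][i + 1][tag] += 1
        apply_unary(chart[i][i + 1])
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):  # split point between the two children
                for parent, left, right in BINARY:
                    chart[i][j][parent] += chart[i][k][left] * chart[k][j][right]
            apply_unary(chart[i][j])
    return chart[0][n][start]

print(count_parses("guard tests like gold".split()))
```

For the hypothetical sentence, the chart finds four S parses: [NP guard] [VP [VP tests] [PP like gold]]; [NP [Adj guard] [NP tests]] [VP like [NP gold]]; [VP guard [NP [NP tests] [PP like gold]]]; and [VP [VP guard [NP tests]] [PP like gold]].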