Project 3

![[Logo]](sponge.jpg)

Project 3: Menger Sponge


A reference implementation of the Menger sponge, including shading of cube and floor.

![[Solution]](spongesol.jpg)

In this project, you will build a WebGL program that shows a procedurally-generated fractal, the Menger sponge, with user interaction using mouse and keyboard camera controls. You will also be introduced to texturing by writing a simple shader that draws a checkerboard pattern on an infinite, or seemingly infinite, ground plane.

Installing TypeScript

This project is written in TypeScript and runs in your browser. Before you can download and run the starter code, you will need to install the TypeScript compiler and a minimalist HTTP server that runs on your machine and serves the JavaScript to your browser.

- Install the NPM package manager, which is distributed with Node.js.

- Install the TypeScript compiler tsc, and http-server. If NPM is properly installed, you should be able to do this installation using the following commands (might require an Administrator Mode command line on Windows):

npm install -g typescript

npm install -g http-server

If the installation is successful, you should be able to run tsc and http-server on your command line (both programs should have been added to your PATH).

Starter Code

As before, we have provided starter code, written in TypeScript and using WebGL, as a starting point for this project.

This code is a working example of how to set up WebGL and a shader program to draw a single red triangle on the screen. If you do not see a red triangle when you run the code, your development environment is not set up correctly; please get help from me or the TA. We have also implemented routines to detect and respond to keyboard and mouse input, which you will hook into to update the camera in real time.

Building the Starter Code

You will use the script make-menger.py to invoke the tsc compiler on the TypeScript source files in src/. The script also does some post-processing to combine the JavaScript files with the static files necessary for the website to function. The complete web package is installed in dist/. Do not directly edit the files in dist/. All development should take place in the source files in src/.

Thus, to compile the code, simply run make-menger.py from the folder that contains it (the project root folder), and check that your updated file structure is correct.

Running the Starter Code

To run the starter code, first, you must launch the HTTP server. From the project root directory (containing dist/), run:

http-server dist -c-1

If successful, you should get a confirmation message that the server is now running. You can then launch the app by pointing your browser to http://127.0.0.1:8080.

Required Features (100 points)

If you look at the source code, you will find it is organized into the following sections:

Structure of the Starter Code (in App.ts)

    Set up WebGL context
    Create shader program
    Compile shaders and attach to shader program
    Link shader program
    Load geometry to render
    Create Vertex Array Objects and Vertex Buffer Objects
    Create uniform (global) variables
    while true do
        Clear screen
        Tell WebGL what shader program to use
        Tell WebGL what to render
        Render!
    end while

Each of these steps is described below in more detail.

Set up WebGL context. This boilerplate creates a WebGL context (window) and sets some of its properties. You can mostly ignore this section when getting started.

Create and compile shader program. A shader program is a GPU program for turning geometry into pixels. A more sophisticated example might use many shader programs: one to render glass, one to render rock, another for fire, etc. In this simple example, a single shader program, defined in Shaders.ts, is used to render all of the geometry. As mentioned in class, each shader program contains several shaders that play different roles in the graphics pipeline. This assignment uses three shaders: a vertex shader and two fragment shaders, which are described below.

Link shader program. gl.linkProgram finalizes the shader program; after this point, the program can be selected with gl.useProgram in the rendering loop to do rendering. It is also necessary to tell WebGL the location numbers of the GLSL variables; gl.getAttribLocation does this for the vertex positions and normals.

Load geometry to render. The geometry in this assignment is defined in MengerSponge.ts. It is created from an array of vertex positions and an array of face indices (zero-indexed) into this list of vertices. The starter code creates a single triangle with this information. You will completely replace this geometry with your own Menger sponge geometry, and you will also need to define the vertex normals as you construct your sponge.

Create Vertex Array Objects and Vertex Buffer Objects. The geometry loaded in the previous step is stored in system RAM. In order to render the vertices and triangles, that data must be transferred (bussed) to the GPU. WebGL will handle this automatically, but you have to tell it what and where the data is. A Vertex Array Object (VAO) fulfills this purpose: it is a container that records which buffers hold the data shared between the CPU and GPU and how that data is laid out. The starter code creates a VAO and fills it with three arrays (Vertex Buffer Objects): one for the list of vertices, one for the list of vertex normals, and one for the list of triangles.

There is another important function in this step. The GPU doesn't keep track of variables by name: all GPU variables are labeled with integers (called locations). When we write our setup code, we give a name to the array of vertex positions (in this case obj vertices), and in the vertex shader we also give a name to the position variable (vertex_position). Part of setting up a shader program is telling WebGL which arrays (and which GLSL variables) correspond to which location numbers on the GPU. gl.vertexAttribPointer tells WebGL that the VBO just created from obj vertices is some vertex attribute number. Another such call tells WebGL that the VBO containing vertex normals is another attribute number. Later on, we tell WebGL that the vertex attribute for obj vertices should be associated with the GLSL variable vertex_position and that the vertex attribute for normals should be associated with the GLSL variable vertex_normal. In a more complicated example, we might have more VBOs (for example, for color), and each of these would be another vertex attribute number.

Create uniform (global) variables. Above, we used VBOs to transfer data to the GPU. There is a second way: you can specify uniform (global) variables that are sent to the GPU and can be used in any of the shaders. We are using uniforms to pass in lighting data and the MVP matrices. Like vertex attributes, these uniform variables are numbered. If we want to refer to the global variables from the code running on the CPU, we need to look up their numbers. For example, the vertex shader declares a light-position uniform variable; perhaps it gets assigned to uniform variable number zero. The corresponding gl.getUniformLocation call looks up that number and stores it so that we can modify the variable from the TypeScript code later.

Clear screen. We are now inside the render loop (draw). Everything above this point runs only once, and everything below runs every frame. The first thing the starter code does is clear the framebuffer. As mentioned in class, the framebuffer stores more data than just color, and this block of code clears both the color buffer (setting the whole screen to black) and the depth buffer.

Tell WebGL what shader program to use. We only have one, so there is not much choice. Later you will write a second shader program for rendering the floor.

Tell WebGL what to render. During initialization we set up some VBOs and uniform variables. Here we tell the GPU which VAO the shader program will use, then send the data in the VBOs (the vertices and faces) to the GPU. Hooking up the wrong VAOs/VBOs to the wrong shader program is a classic cause of silently rendering the wrong geometry.

Render! gl.drawElements runs our shader program and rasterizes one frame into the framebuffer. As part of this assignment, you will also need to do the necessary setup to draw a floor.

To complete this assignment, you do not have to understand all of the above; there are only a few steps that need to be changed to render the floor and cube. But you should familiarize yourself with how the example program is structured, as future projects will build on this skeleton.

Understanding the GLSL Shader

The example shader program contains three shaders: a vertex shader and two fragment shaders. Please refer to the lecture slides for information on how these shaders are related to each other in the graphics pipeline.

The vertex shader is executed separately on each vertex to be rendered, and its main role is to transform vertex positions before further processing. It takes as input the vertex's position (which, as explained above, is passed in from the TypeScript array of vertex positions) and writes to gl_Position, a built-in variable that stores the transformed position of the vertex. This is the position that will be passed on to the rest of the rendering pipeline. Right now the shader simply converts vertex positions to clip coordinates. It also computes the direction from the vertex to the light in camera coordinates and transforms the vertex normal to camera coordinates; the fragment shader uses both for shading. There is no need to transform these beyond camera coordinates (so the projection matrix isn't applied to them), since shading can be done in camera coordinates. You won't need to modify this shader in this assignment.

The fragment shader is called once per pixel during rasterization of each triangle. It diffuse-shades each triangle, so that faces that point towards the light are brighter than faces at an angle to the light. This code uses the normals previously computed and passed through the pipeline to it. We have provided a skeleton for both the cube and the ground plane.
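For experimenting outside the GPU, the diffuse term described above can be mirrored in TypeScript. This is a minimal sketch of standard Lambertian shading, not the provided shader code; `diffuse`, `dot`, and `normalize` are hypothetical helpers, and both vectors are assumed to be expressed in the same (camera) coordinates. In GLSL the same idea is usually written as clamp(dot(N, L), 0.0, 1.0) with normalized N and L.

```typescript
type Vec3 = [number, number, number];

function dot(a: Vec3, b: Vec3): number {
  return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

function normalize(v: Vec3): Vec3 {
  const len = Math.sqrt(dot(v, v));
  return [v[0] / len, v[1] / len, v[2] / len];
}

// Lambertian (diffuse) factor: cosine of the angle between the surface normal
// and the direction toward the light, clamped to [0, 1] so that faces turned
// away from the light get no light instead of "negative" light.
function diffuse(normal: Vec3, toLight: Vec3): number {
  const d = dot(normalize(normal), normalize(toLight));
  return Math.min(Math.max(d, 0), 1);
}
```

A face pointing straight at the light gets a factor of 1, and a face pointing away from it gets 0 rather than a negative value.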

Creating the Menger Sponge

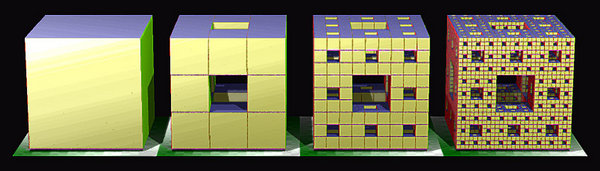

The Menger sponge, for L = 0 to 3 (left to right).

Notice the fractal nature of the sponge.


Your first task in this assignment is to procedurally generate the Menger sponge illustrated above. The sponge is an example of a fractal -- its geometry is self-similar across scales, so that if you zoom in on one part of the sponge, it looks the same as the whole sponge. The full sponge is thus infinitely detailed, and cannot be rendered or manufactured; however, better and better approximations of the sponge can be created using a recursive algorithm. Let L be the nesting level of the sponge; as L increases, the sponge becomes more detailed. Given L, the algorithm for building the L-level sponge is listed below.

Sponge generation code

    Start with a single cube
    for i = 1 to L do
        for each cube do
            Subdivide cube into 27 subcubes of one third the side length
            Delete the 7 inner subcubes: the center subcube and the 6 face-center subcubes (see figure, second from the left)
        end for
    end for

Notice that for L = 0, the Menger sponge is simply a cube. You will write code to generate the Menger sponge for 0 ≤ L and modify the keyboard callback so that pressing any key from '1' to '4' draws the appropriate sponge on the screen. All sponges should fill a bounding box whose diametrically-opposite corners are (m,m,m) and (M,M,M), where m = -0.5 and M = 0.5.
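The subdivision loop can be sketched in TypeScript as follows. This is only an illustration, not the starter code's MengerSponge.ts interface: `Cube` and `mengerCubes` are hypothetical names, and each cube is stored as its minimum corner plus side length so that triangles can be generated in a separate pass. Level 0 is the single starting cube, so subdivision runs exactly L times.

```typescript
type Vec3 = [number, number, number];

// An axis-aligned cube, stored as its minimum corner and side length.
interface Cube {
  min: Vec3;
  size: number;
}

// Returns the cubes of a level-L Menger sponge filling [-0.5, 0.5]^3.
// Each pass replaces every cube by the 20 of its 27 subcubes that survive.
function mengerCubes(level: number): Cube[] {
  let cubes: Cube[] = [{ min: [-0.5, -0.5, -0.5], size: 1.0 }];
  for (let i = 1; i <= level; i++) {
    const next: Cube[] = [];
    for (const c of cubes) {
      const s = c.size / 3;
      for (let x = 0; x < 3; x++) {
        for (let y = 0; y < 3; y++) {
          for (let z = 0; z < 3; z++) {
            // The 7 deleted subcubes are exactly those with at least two
            // indices in the middle slot: 6 face centers plus the body center.
            const middles = Number(x === 1) + Number(y === 1) + Number(z === 1);
            if (middles >= 2) continue;
            next.push({
              min: [c.min[0] + x * s, c.min[1] + y * s, c.min[2] + z * s],
              size: s,
            });
          }
        }
      }
    }
    cubes = next;
  }
  return cubes;
}
```

Because each level multiplies the cube count by 20, a level-L sponge has 20^L cubes (8,000 at L = 3), which is one more reason to regenerate geometry only when the level changes.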

- Build out a Menger sponge based on its nesting level. The sponge's vertices, indices, and normals should properly position and scale its subcubes as described in the pseudocode. You need to ensure that cube faces (each consisting of two triangles) have outward-pointing normals. This means that for every triangle with vertices vi, vj, vk, the triangle normal (vj - vi) x (vk - vi) must point *away* from the cube. Incorrectly oriented triangles can be fixed by permuting the order of the triangle's vertex indices. If triangles are not oriented correctly, they will appear incorrectly shaded (probably black) when rendered, because they are "inside out."

- Ensure that the keyboard callbacks in Gui.ts for keys '1' to '4' generate and display a Menger sponge of the appropriate level L. The geometry should only be recreated when one of these keys is pressed; do not procedurally generate the cube every frame! (Hint: any time you change the vertex or triangle list, you need to inform WebGL about the new data by binding the vertex and triangle VBOs using gl.bindBuffer, passing the new data to the GPU using gl.bufferData, etc.) The skeleton code will do most of this for you if you slot your Menger-generation code into the right place.
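One way to get outward-facing triangles without checking each face by hand is to give every face an outward normal n and two in-face axes u and v with u x v = n, then emit the four corners counterclockwise in (u, v). The TypeScript sketch below assumes positions are stored as 4-component points (w = 1) and normals as 4-component directions (w = 0); if the starter code's buffers use 3 components, drop the fourth entry. `buildMesh`, `FACES`, `CORNERS`, and the (minimum corner, side length) `Cube` record are hypothetical names.

```typescript
type Vec3 = [number, number, number];

interface Cube {
  min: Vec3;
  size: number;
}

interface Mesh {
  positions: Float32Array; // 4 floats per vertex: x, y, z, 1
  normals: Float32Array; // 4 floats per vertex: nx, ny, nz, 0
  indices: Uint32Array; // 3 indices per triangle
}

// Each face: outward normal n and in-face axes u, v chosen so that u x v = n.
const FACES: { n: Vec3; u: Vec3; v: Vec3 }[] = [
  { n: [1, 0, 0], u: [0, 1, 0], v: [0, 0, 1] },
  { n: [-1, 0, 0], u: [0, 0, 1], v: [0, 1, 0] },
  { n: [0, 1, 0], u: [0, 0, 1], v: [1, 0, 0] },
  { n: [0, -1, 0], u: [1, 0, 0], v: [0, 0, 1] },
  { n: [0, 0, 1], u: [1, 0, 0], v: [0, 1, 0] },
  { n: [0, 0, -1], u: [0, 1, 0], v: [1, 0, 0] },
];

// Corner offsets along (u, v), counterclockwise when viewed from outside.
const CORNERS: [number, number][] = [[-1, -1], [1, -1], [1, 1], [-1, 1]];

function buildMesh(cubes: Cube[]): Mesh {
  const positions: number[] = [];
  const normals: number[] = [];
  const indices: number[] = [];
  for (const c of cubes) {
    const h = c.size / 2;
    const center: Vec3 = [c.min[0] + h, c.min[1] + h, c.min[2] + h];
    for (const { n, u, v } of FACES) {
      const base = positions.length / 4; // index of this face's first vertex
      for (const [a, b] of CORNERS) {
        for (let k = 0; k < 3; k++) {
          positions.push(center[k] + h * (n[k] + a * u[k] + b * v[k]));
        }
        positions.push(1);
        normals.push(n[0], n[1], n[2], 0);
      }
      // Since u x v = n, (v1 - v0) x (v2 - v0) points along n for both triangles.
      indices.push(base, base + 1, base + 2, base, base + 2, base + 3);
    }
  }
  return {
    positions: new Float32Array(positions),
    normals: new Float32Array(normals),
    indices: new Uint32Array(indices),
  };
}
```

Each cube gets 24 vertices rather than 8 so that every face can carry its own flat normal. The sketch also keeps faces shared by neighboring subcubes; they are hidden, and removing them is an optional optimization.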

Camera Controls

Next, you will implement a perspective camera, and keyboard and mouse controls that let you navigate around the world. This will be done in Gui.ts. As discussed in class, the major pieces needed for setting up a camera are 1) a view matrix, which transforms from world coordinates to camera coordinates; as the camera moves around the world, the view matrix changes; 2) a perspective matrix, which transforms from 3D camera coordinates to 2D screen coordinates using a perspective projection, and 3) logic to move the camera in response to user input.
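For reference, here are the conventional right-handed lookAt (view) and perspective matrices written out explicitly, stored column-major as WebGL expects by default. This is a sketch under the assumption that the starter code's matrix library follows the same OpenGL-style conventions; `lookAt`, `perspective`, and `transform` are hypothetical names, and in the project you would normally call the provided library instead.

```typescript
type Vec3 = [number, number, number];
type Vec4 = [number, number, number, number];

const sub = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const cross = (a: Vec3, b: Vec3): Vec3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];
const normalize = (v: Vec3): Vec3 => {
  const len = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / len, v[1] / len, v[2] / len];
};

// World -> camera. Column-major: the element at (row r, column c) is m[4 * c + r].
function lookAt(eye: Vec3, center: Vec3, up: Vec3): Float32Array {
  const f = normalize(sub(center, eye)); // look
  const s = normalize(cross(f, up)); // right
  const u = cross(s, f); // up, re-orthogonalized
  return new Float32Array([
    s[0], u[0], -f[0], 0,
    s[1], u[1], -f[1], 0,
    s[2], u[2], -f[2], 0,
    -dot(s, eye), -dot(u, eye), dot(f, eye), 1,
  ]);
}

// Camera -> clip for a symmetric frustum with vertical field of view fovy (radians).
function perspective(fovy: number, aspect: number, near: number, far: number): Float32Array {
  const t = 1 / Math.tan(fovy / 2);
  const m = new Float32Array(16); // all entries start at 0
  m[0] = t / aspect;
  m[5] = t;
  m[10] = (far + near) / (near - far);
  m[11] = -1;
  m[14] = (2 * far * near) / (near - far);
  return m;
}

// Multiplies a column-major 4x4 matrix by a homogeneous column vector.
function transform(m: Float32Array, p: Vec4): Vec4 {
  const out: Vec4 = [0, 0, 0, 0];
  for (let r = 0; r < 4; r++) {
    for (let c = 0; c < 4; c++) out[r] += m[4 * c + r] * p[c];
  }
  return out;
}
```

Multiplying a point by the view matrix and then the perspective matrix gives clip coordinates; dividing x, y, and z by w yields normalized device coordinates, in which a point straight ahead of the camera lands at x = y = 0.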

The camera's position and orientation are encoded using a set of points and vectors in world space:

- the eye, or position of the camera in space;

- the look direction that the camera is pointing;

- the look direction is not enough to specify the orientation of the camera completely, since the camera is still free to spin about the look axis. The up vector pins down this rotation by specifying the direction that should correspond to "up" in screen coordinates (the negative y direction);

- the tangent or "right" direction of the camera. Notice that this direction is completely determined by the cross product of the look and up directions;

- the camera_distance, the distance from the camera to the point that the camera is focusing on; this distance controls the camera zoom;

- the center point, the point on which the camera is focusing. Notice that center = eye + camera_distance * look.

The following default values should be used to initialize the camera at program start. They ensure that the Menger sponge begins within the camera's field of view:

- eye = (0,0,camera_distance);

- look = (0,0,-1);

- up = (0,1,0).

The other default values can be computed from these.
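The derived values follow directly from the definitions above (right = look x up, center = eye + camera_distance * look). A minimal sketch, where `defaultCamera` and `CameraState` are hypothetical names and camera_distance is left as a parameter because its default value is not specified here:

```typescript
type Vec3 = [number, number, number];

const cross = (a: Vec3, b: Vec3): Vec3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];

interface CameraState {
  eye: Vec3;
  look: Vec3;
  up: Vec3;
  right: Vec3;
  center: Vec3;
  distance: number;
}

function defaultCamera(cameraDistance: number): CameraState {
  const eye: Vec3 = [0, 0, cameraDistance];
  const look: Vec3 = [0, 0, -1];
  const up: Vec3 = [0, 1, 0];
  const right = cross(look, up); // (1, 0, 0)
  // center = eye + camera_distance * look, which is the origin here.
  const center: Vec3 = [
    eye[0] + cameraDistance * look[0],
    eye[1] + cameraDistance * look[1],
    eye[2] + cameraDistance * look[2],
  ];
  return { eye, look, up, right, center, distance: cameraDistance };
}
```

With these defaults the camera sits on the +z axis looking at the origin, which is where the sponge's bounding box is centered.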

The camera coordinate system (left) and its relationship to screen coordinates (right).

The camera's orientation is represented as an orthonormal frame of three orthogonal unit vectors. Note that the origin of the screen's coordinate system is the top-left corner.

![[Camera]](camera.jpg)

You will implement a first-person shooter (FPS) camera, where the center moves based on mouse input. You do not need to implement orbital controls, but you are welcome to if you'd like. The starter code already includes a keyboard callback that updates the global boolean fps_mode whenever its key is pressed, which you can use if you do want to create an orbital mode.

- Implement camera rotation when left-clicking the mouse and dragging. The starter code contains a mouse callback that tracks the mouse position during dragging and records the mouse drag direction in screen coordinates in the vector mouse_direction. Calculate a corresponding vector in world coordinates. This vector can be constructed by applying the distance moved in screen coordinates along the x and y axes to the right and up vectors (initially these are essentially the x and y axes, since the camera starts out looking down the z axis). The camera should swivel in the direction of dragging, i.e. it should rotate about an axis perpendicular to this vector and to the look direction. Rotate by an angle of rotation_speed radians each frame that the mouse is dragged. You can use the rotate functions in the Camera.ts library in webglutils.

- Implement a zoom function when right-clicking and dragging the mouse. Dragging the mouse vertically upward should zoom in (decrease the camera_distance) by zoom_speed each frame that the mouse is dragged, and dragging downward should zoom out by the same speed. You are encouraged, but not required, to fix the classic bug where zooming in too far inverts the world.

- Implement the behavior of the 'w' and 's' keys. In FPS mode, holding down the 'w' or 's' key should translate both the eye and center by ±zoom_speed times the look direction.

- Implement the behavior of holding down the 'a' and 'd' keys. In FPS mode, the keys should strafe, i.e. translate both the eye and center by ±zoom_speed times the right direction.

- Pressing the left and right arrow keys should roll the camera: spin it counterclockwise or clockwise (respectively) by roll_speed radians per frame.

- Holding down the up or down arrow keys should translate the camera position (in FPS mode), as with the 'a' and 'd' keys, but this time in the ±up direction (with a translation speed of pan_speed units per frame).

- (Extra Credit) Implement Save functionality using Control-S to save current geometry to an OBJ file.
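The mouse-drag rotation described above can be sketched as follows. Because the exact signatures of the rotate functions in Camera.ts are not shown here, this standalone version implements rotation about an arbitrary axis with Rodrigues' formula. It assumes the drag (dx, dy) is in screen coordinates with y pointing down, as in the camera figure; `swivel`, `rotateAbout`, and `Frame` are hypothetical names.

```typescript
type Vec3 = [number, number, number];

const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const cross = (a: Vec3, b: Vec3): Vec3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];
const normalize = (v: Vec3): Vec3 => {
  const len = Math.hypot(v[0], v[1], v[2]);
  return [v[0] / len, v[1] / len, v[2] / len];
};

// Rodrigues' formula: rotate v about the unit axis k by angle radians (right-hand rule).
function rotateAbout(v: Vec3, k: Vec3, angle: number): Vec3 {
  const c = Math.cos(angle);
  const s = Math.sin(angle);
  const kxv = cross(k, v);
  const kdv = dot(k, v) * (1 - c);
  return [
    v[0] * c + kxv[0] * s + k[0] * kdv,
    v[1] * c + kxv[1] * s + k[1] * kdv,
    v[2] * c + kxv[2] * s + k[2] * kdv,
  ];
}

interface Frame {
  look: Vec3;
  up: Vec3;
  right: Vec3;
}

// dx, dy: mouse drag in screen coordinates, where +y points down the screen.
function swivel(frame: Frame, dx: number, dy: number, rotationSpeed: number): Frame {
  // Screen +x corresponds to world right, screen -y to world up.
  const drag: Vec3 = [
    dx * frame.right[0] - dy * frame.up[0],
    dx * frame.right[1] - dy * frame.up[1],
    dx * frame.right[2] - dy * frame.up[2],
  ];
  if (dot(drag, drag) === 0) return frame; // no drag, no rotation
  // Axis perpendicular to both look and drag; rotating about look x drag turns look toward drag.
  const axis = normalize(cross(frame.look, drag));
  const look = normalize(rotateAbout(frame.look, axis, rotationSpeed));
  const up = normalize(rotateAbout(frame.up, axis, rotationSpeed));
  return { look, up, right: normalize(cross(look, up)) };
}
```

Re-normalizing the vectors after each frame keeps small floating-point errors from accumulating into a skewed frame over a long drag.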

As implementing camera controls can be subtle and it is easy to apply the wrong rotation, transform using the wrong coordinates, etc., make regular use of the reference executable to verify that your camera behavior matches.
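The keyboard and zoom behaviors listed in the camera bullets reduce to a few small updates of the camera state. In this sketch, `translate`, `zoom`, `roll`, and `Camera` are hypothetical names; `amount`, `delta`, and `angle` stand in for ±zoom_speed, ±pan_speed, or ±roll_speed as the bullets specify, and the sign convention for "counterclockwise" roll is an assumption to verify against the reference executable.

```typescript
type Vec3 = [number, number, number];

interface Camera {
  eye: Vec3;
  center: Vec3; // center = eye + distance * look
  look: Vec3;
  up: Vec3;
  right: Vec3;
  distance: number;
}

const addScaled = (p: Vec3, d: Vec3, k: number): Vec3 => [p[0] + k * d[0], p[1] + k * d[1], p[2] + k * d[2]];

// 'w'/'s' (dir = look), 'a'/'d' (dir = right), up/down arrows (dir = up):
// move eye and center together by a signed amount.
function translate(cam: Camera, dir: Vec3, amount: number): Camera {
  return { ...cam, eye: addScaled(cam.eye, dir, amount), center: addScaled(cam.center, dir, amount) };
}

// Right-drag zoom: change the distance while holding the center fixed. Clamping the
// distance at a small positive minimum keeps the eye from passing through the
// center, which is what makes the world appear to flip.
function zoom(cam: Camera, delta: number, minDistance = 0.01): Camera {
  const distance = Math.max(cam.distance + delta, minDistance);
  return { ...cam, distance, eye: addScaled(cam.center, cam.look, -distance) };
}

// Roll about the look axis. A positive angle tilts up toward -right, which we take
// to mean counterclockwise as seen by the viewer (assumed convention).
function roll(cam: Camera, angle: number): Camera {
  const c = Math.cos(angle);
  const s = Math.sin(angle);
  const up: Vec3 = [
    cam.up[0] * c - cam.right[0] * s,
    cam.up[1] * c - cam.right[1] * s,
    cam.up[2] * c - cam.right[2] * s,
  ];
  const right: Vec3 = [
    cam.right[0] * c + cam.up[0] * s,
    cam.right[1] * c + cam.up[1] * s,
    cam.right[2] * c + cam.up[2] * s,
  ];
  return { ...cam, up, right };
}
```

Each update returns a new object whose eye, center, and distance still satisfy center = eye + camera_distance * look, and roll keeps right = look x up.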

Some Shader Effects

Finally, you will do some shader programming to liven up the rendering of the cube and place a ground plane on the screen so that the user has a point of reference when moving around the world. These GLSL programs should be placed in Shaders.ts.

- Color the different faces of the cube based on what direction the face normal is pointing in world coordinates. Notice that the face normals will be axis-aligned no matter how the user moves or spins the camera, since transforming the camera does not move the cube in world coordinates. Refer to the following table for how to color the faces:

| Normal | Color |
| --- | --- |
| (1,0,0) | red |
| (0,1,0) | green |
| (0,0,1) | blue |
| (-1,0,0) | red |
| (0,-1,0) | green |
| (0,0,-1) | blue |

All coloring code should take place in the fragment shader. The above colors are the base colors; leave intact the shading of the faces due to orientation of the face relative to the light source.

- Add a ground plane to the scene. This plane should be placed at y = -2.0 and extend either very far or infinitely in the x and z directions. Rendering of this plane should be completely separate from that of the cube: create new lists of vertices and triangles for the plane, new VBOs for sending this geometry to the GPU, and a new shader program for rendering the plane. This shader program can reuse the cube's vertex shader, but should have its own fragment shader.

Ideally you should be able to represent the geometry of the infinite plane using only a small (no more than 4) number of triangles (hint: think in homogeneous coordinates), but you can also just make a very large plane in world space if you so choose.

- Write a fragment shader for the ground plane, so that the plane is colored using a checkerboard pattern. The checkerboard should be aligned to the x and z axes (in world coordinates), and each grid cell should have size 5.0 x 5.0. The cell base color should alternate white and black (again, these are base colors, and you should reuse the cube shader's shading code to dim pixels facing away from the light).

There are many ways of coding the checkerboard pattern; use any method you like. You may find the following GLSL functions useful: mod, clamp, floor, sin. Hint: the fragment shader, by default, does not have access to the position of pixels in world coordinates (since that information was lost earlier in the pipeline when the vertex positions are multiplied by the view and perspective matrices). You may find it useful to pass the untransformed world coordinates to the fragment shader.
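The per-fragment base colors for the cube and the floor, and the homogeneous-coordinates hint for the plane, can be prototyped on the CPU before porting them to GLSL. This TypeScript sketch assumes the world-space normal and world-space position are available to the fragment shader (for example, passed through from the vertex shader); `cubeBaseColor`, `checkerBaseColor`, and `infinitePlane` are hypothetical names, and which checkerboard color counts as white is an arbitrary choice.

```typescript
type Vec3 = [number, number, number];

// Cube base color: |n| maps (±1,0,0) to red, (0,±1,0) to green, (0,0,±1) to blue.
// GLSL equivalent: abs(normal.xyz), then multiply by the existing diffuse factor.
function cubeBaseColor(worldNormal: Vec3): Vec3 {
  return [Math.abs(worldNormal[0]), Math.abs(worldNormal[1]), Math.abs(worldNormal[2])];
}

// Checkerboard with 5.0 x 5.0 cells aligned to x and z. GLSL equivalent:
// mod(floor(p.x / 5.0) + floor(p.z / 5.0), 2.0). JavaScript's % keeps the sign of
// the dividend, so ((n % 2) + 2) % 2 reproduces GLSL mod's non-negative result.
function checkerBaseColor(x: number, z: number, cellSize = 5.0): Vec3 {
  const n = Math.floor(x / cellSize) + Math.floor(z / cellSize);
  const white = ((n % 2) + 2) % 2 === 0;
  return white ? [1, 1, 1] : [0, 0, 0];
}

// Ground plane at height y: one finite vertex plus four points at infinity (w = 0).
// The fan is wound so that each triangle faces +y.
function infinitePlane(y = -2.0) {
  const positions = new Float32Array([
    0, y, 0, 1, // 0: finite point on the plane
    1, 0, 0, 0, // 1: direction +x
    0, 0, -1, 0, // 2: direction -z
    -1, 0, 0, 0, // 3: direction -x
    0, 0, 1, 0, // 4: direction +z
  ]);
  const normals = new Float32Array([
    0, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0, 0, 1, 0, 0,
  ]);
  const indices = new Uint32Array([0, 1, 2, 0, 2, 3, 0, 3, 4, 0, 4, 1]);
  return { positions, normals, indices };
}
```

In the plane sketch, vertices with w = 0 are points at infinity, so the four-triangle fan around one finite point covers the whole plane. If you pass the world position to the fragment shader for the checkerboard, pass it as a vec4 and divide xyz by w there, since w is 0 at the outer vertices. With a finite far plane in the perspective matrix, the visible part of the plane still ends at the far plane.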

A3 Milestone

For your A3 milestone, submit a GitLab link to the project on a branch called a3-milestone. This should include the stable code you've written so far, along with a progress report as a .pdf document stating what has been accomplished (and by whom, if working with a partner) and what the plan is for completing the assignment on time. Include some image artifacts of what's been implemented as well.

As a guideline of what we're expecting, you should have all levels of the cube completed. You should also be making good progress into either the camera controls, the shaders, or a little progress into both.

In terms of your plan, please try to be specific -- this document is also intended to help you think through what needs to be done and what a reasonable timeline should be. Therefore, you should include:

- A rough timeline of when you expect to complete the specific, required features

- A description of the algorithm you'll use to implement each of the specific, required features

- Notes about any open questions and/or known issues that might slow down your progress implementing any of the required features

Submission Instructions

Submit your project via GitLab and provide a link to it via Canvas. Make sure to keep your repository private and give access to me (thesharkcs) and the TA (TA GitLab usernames are posted to Announcements on Canvas). We will be grading based on a branch called code-freeze, so please make sure you have a working final version of the project on that branch. If commits are made after the deadline, we will treat that as a late submission, and we will deduct late slips accordingly and/or apply the late submission penalty as per the syllabus.

In addition to your complete source code, the project should include:

- A README file including your name (and your partner's name) and whether you made any modifications to the included libraries/build script.

- A report explaining how you implemented the cube, the shaders, and the controls, as well as several screenshots of the final product. Please also include a section on issues you encountered, known bugs, and future work. This will help us understand where you ran into issues and better assess your work, even if we encounter issues while grading.

Grading

You'll be graded on how well you met the requirements of the assignment. D quality or below work has few or none of the features implemented/working. C quality work has implemented some or most of the features, but the features are built in a way that is not fully working and/or the logic is not well-considered. B quality work has implemented most or all of the features in a way that works but may have some issues and/or the logic is not fully considered. A quality work has implemented all of the features in a robust, polished way that demonstrates your understanding of the assignment, the math, and the provided code base. Note that a well-written report can help us understand your thought process, which is a large part of what we are grading you on in these assignments.

Reminder about Collaboration Policy and Academic Honesty

You are free to talk to other students about the algorithms and theory involved in this assignment, or to get help debugging, but all code you submit (except for the starter code, and the external libraries included in the starter code) must be your own. Please see the syllabus for the complete collaboration policy.

Last modified: 2/16/24

by Sarah Abraham

theshark@cs.utexas.edu
