UT Austin Villa

RoboCup@Home DSPL Team

Professors Peter Stone, Andrea Thomaz, Scott Niekum, Luis Sentis, Raymond J. Mooney, and Justin W. Hart

The University of Texas at Austin

Description of the approach and information on scientific achievements

Profs. Stone, Thomaz, and Niekum aim to combine their expertise in reinforcement learning, human-robot interaction, and learning from demonstration towards a full solution to the RoboCup@Home challenge. We propose to bring together postdocs, graduate students, and undergraduate students from each of our groups to enable the HSR robot to interact seamlessly with people in the environment and to learn behaviors ranging from object manipulation, to robust environmental perception, to high-level task sequencing. Our development efforts will be situated within our ongoing Building-Wide Intelligence project, which already includes several human-interactive robots and whose objective is to enable a team of robots to achieve long-term autonomy within the rich, socially interactive environment of the UT Austin Computer Science building. The HSR robot will thus be embedded within a system of heterogeneous robots while its novel software is under development at UT Austin (though of course during the competition it will act fully independently).

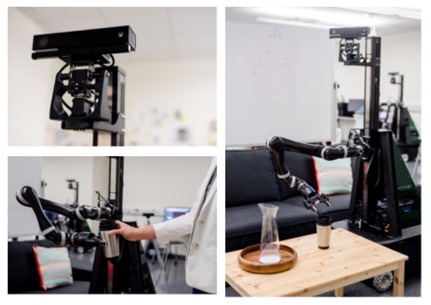

Profs. Thomaz and Niekum have performed much of their past research in home-like environments and have an existing shared lab space set up as an apartment with a living room, dining room, and kitchen. This lab space is in the same building as the Building-Wide Intelligence project, and will therefore allow us to easily develop and test the robot in both an open, fully interactive setting and a more controlled home-like setting.

We expect the HSR robot to enhance our overall system's breadth of capabilities and thus quickly play an important role in novel research on topics ranging from activity recognition, to robust perception, to learned planning and navigation, to general human-robot interaction. Throughout our development, we will continue our strong tradition of releasing well-documented, self-contained behavior modules for components of autonomous robots that other research groups can use to enhance their own research.

The team leaders' past scientific achievements are chronicled on their respective websites:

Relevant publications

- UT Austin Villa's RoboCup-related papers (from Prof. Stone's group).

- Relevant publications from the Building-Wide Intelligence (BWI) project.

- Prof. Niekum's relevant publications on manipulation and learning from demonstration

  - S. Niekum, S. Osentoski, C.G. Atkeson, and A.G. Barto. Online Bayesian Changepoint Detection for Articulated Motion Models. IEEE International Conference on Robotics and Automation (ICRA), May 2015.

  - K. Hausman, S. Niekum, S. Osentoski, and G. Sukhatme. Active Articulation Model Estimation through Interactive Perception. IEEE International Conference on Robotics and Automation (ICRA), May 2015.

  - S. Niekum, S. Osentoski, G.D. Konidaris, S. Chitta, B. Marthi, and A.G. Barto. Learning Grounded Finite-State Representations from Unstructured Demonstrations. International Journal of Robotics Research (IJRR), Vol. 34(2), pages 131-157, February 2015.

  - S. Niekum, S. Osentoski, S. Chitta, B. Marthi, and Andrew G. Barto. Incremental Semantically Grounded Learning from Demonstration. Robotics: Science and Systems 9 (RSS), June 2013.

  - S. Niekum, S. Osentoski, G.D. Konidaris, and Andrew G. Barto. Learning and Generalization of Complex Tasks from Unstructured Demonstrations. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 5239-5246, October 2012.

- Prof. Thomaz' relevant publications on human-robot interaction.

  - V Chu, B Akgun, AL Thomaz. Learning haptic affordances from demonstration and human-guided exploration. IEEE Haptics Symposium (HAPTICS), 2016.

  - Crystal Chao, Andrea Thomaz. Timed Petri nets for fluent turn-taking over multimodal interaction resources in human-robot collaboration. IJRR 35(11): 1330-1353 (2016).

  - B Akgun, A Thomaz. Simultaneously learning actions and goals from demonstration. Autonomous Robots, 2016.

  - B Akgun, AL Thomaz. Self-improvement of learned action models with learned goal models. IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2015.

  - V Chu, T Fitzgerald, AL Thomaz. Learning Object Affordances by Leveraging the Combination of Human-Guidance and Self-Exploration. The Eleventh ACM/IEEE International Conference on Human Robot Interaction, 2015.

  - Sonia Chernova and Andrea L. Thomaz. Robot learning from human teachers. Synthesis Lectures on Artificial Intelligence and Machine Learning, 8(3):1-121, 2014.

Relevant photos/videos

- Our qualification video and all of the unedited clips

- Videos from our past participation in RoboCup@Home are available at the bottom of our RoboCup@Home page.

- A narrated video was presented at ICRA 2008.

- There are many other videos from our competitions available on the UT Austin Villa competitions page.

- There are several research videos associated with papers relevant to our RoboCup team

- There are several research videos associated with papers relevant to the BWI project

- Videos of the BWI project at outreach events

- Video of Prof. Niekum's research on autonomous IKEA furniture assembly

- TEDx overview of Prof. Thomaz' research on socially collaborative robots

- Prof. Thomaz' research on Embodied Active Learning Queries

- Prof. Thomaz' research on Multimodal turn-taking collaborations

Software

During our participation in the RoboCup@Home SPL, we are fully committed to continuing our strong tradition of contributing open source code to the community.

- Our UT Austin Villa RoboCup 3D Simulation Base Code Release won 2nd prize in the 2016 Harting Open Source Competition. It is the basis for our 3D simulation team, which has won 5 of the past 6 competitions.

- Our Soccer SPL source code release, which formed the core of our 2012 SPL championship, has been widely used in the league.

- Our BWI code repository provides an open source suite of ROS packages, fully integrated into an architecture for service robots that operate in dynamic and unstructured human-inhabited environments. It is built on top of the Robot Operating System (ROS) middleware framework. This software architecture takes a hierarchical, layered approach to controlling autonomous robots, in which each layer provides a different granularity of control. At the lowest level, navigation software allows a mobile robot to move autonomously inside a building, switching between 2D navigational maps when it uses the elevator to move to a different floor. A symbolic navigation module built on top of this autonomous navigation module allows the robot to navigate to prespecified doors, rooms, and objects in the environment. At the highest level, planning and reasoning modules allow the robot to execute high-level tasks, such as delivering an object from one part of the building to another, using a complex sequence of symbolic actions.

- Our TEXPLORE code provides an open-source package for reinforcement learning on real robots.

- Our ar_track_alvar ROS package has become a community standard for tag-based perception.

- Our ROS implementation of Dynamic Movement Primitives has become a popular tool for learning from demonstration.

- We have made research code available for:

Team Roster

- Justin Hart, Postdoctoral fellow with the Learning Agents Research Group

- Jivko Sinapov, Postdoctoral fellow with the Learning Agents Research Group

- Rolando Fernandez, Master's student

- Nick Walker, undergraduate student

Additionally, we will be recruiting students from the Freshman Research Initiative Autonomous Intelligent Robotics stream. We expect to add five additional students for the summer in this way.

Previous participation in RoboCup

- UT Austin Villa has participated successfully in RoboCup competitions every year since 2003.

- In 2012, we finished 1st place in the soccer SPL.

- In 2016, we finished 2nd place in the soccer SPL.

- Also in 2016, we finished 1st place in the 3D simulation league for the 5th time in 6 years.

- We finished 2nd place in RoboCup@Home at RoboCup 2007.