In order for a robot to be able to navigate the world, it must be able to “see” its environment and be able to process what it sees. However, since computers don’t naturally know how to understand images, this is a task easier said than done. Computer vision researchers are up to the task of training these programs.

Computer science professor Kristen Grauman researches computer vision, a field of computer science that deals with machine learning and image processing with the goal of having artificially-intelligent systems “understand” images and video.

“My domain is vision, but nearly everything we do to tackle the problems involves machine learning as well,” Grauman said.

Her work also touches on robotics, since she also researches embodied vision, which takes into account the actions of the agent - human or robotic - that is seeing the environment. By incorporating action into computer vision, computers can solve problems in dynamic, changing environments instead of with just static images.

“These are problems in computer vision where you aren’t just thinking about analyzing static photos or videos that were already captured by some human photographer,” Grauman said. “Instead, you have an agent that can control its own camera, that can have multi-sensory input like sound and motion, or maybe feel and touch as well as sight, and must both learn and act in an ongoing, continual, long-term way with this information.”

According to Grauman, these systems in computer vision are increasingly using reinforcement learning, where the agent is rewarded for correct behavior over time, instead of supervised learning, where the system is taught patterns through labeled images.

For example, reinforcement learning has been used in her work to help systems make inferences from initial observations and decide what to look for next. The common approach of training programs on pre-captured, labeled photographs offers an incomplete view of the situation. In contrast, Grauman’s research group has investigated how to train intelligent computer vision systems by having it scan a scene or manipulate a 3D object in order to inspect it; this allows the agent to view an environment efficiently and make decisions about where to look.

Although scientists don’t completely understand how human sight works, many computer vision researchers, such as Grauman, find parallels in human neuroscience and how these computer vision systems are set up. For example, with embodied vision, cognitive science studies have shown that vision development in animals is influenced by body motion.

Training computers to understand images and videos in place of humans, however, is critical because of the sheer scale of media that needs to be viewed. For example, it would take more than 16 years for someone to watch all of the new content uploaded to YouTube in one day.

“There’s just the volume of data versus time, but there’s also discovery and mining for things that, even if you had the time and human attention to deal with, you can’t scale,” Grauman said. “Machines are much better at discovering these things, given massive amounts of data, than you can expect humans to be.”

Systems that can monitor videos quickly and at the scale of social media can help, for example, in monitoring Facebook for people who are depressed or in danger, she said. This research could also lead to systems that can summarize video visually by finding the important parts of long videos.

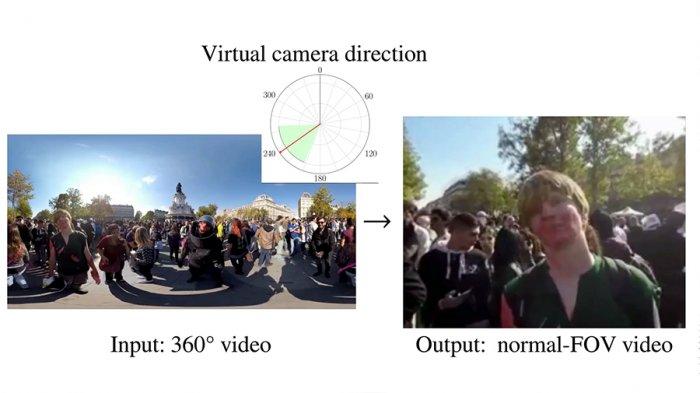

Grauman’s research group has also done a lot of work with 360-degree videos. A recent project they’ve worked on, AutoCam, solves the problem of where to look while viewing a 360-degree video. In such a video, which can often be viewed either with a virtual reality headset or by moving controls on a flat display like a computer, there is content in every direction. However, that makes it easy to for a viewer to miss what’s important, especially if something is happening behind the current field of view. For AutoCam, the research group trained a program to move the camera angle automatically through the 360-degree environment, capturing the most important information. This results in a guided experience through the video in a normal field of view without having to turn around with a VR headset or using computer controls.

“It’s discovering where to look over time in a video that has omnidirectional content,” Grauman said.

The system was trained by processing lots of unlabeled YouTube videos so that it learned what properties are common to human-filmed videos, such as what kind of content the camera focuses on and the composition of the shots. When used on a 360-degree video, the program divides it into all possible viewing angles for each chunk of time and picks the pieces that best match these properties.

“Now, you could optimize for a path through those glimpses that tries to keep the most human-captured looking parts together so you get a smooth camera motion that also tries to frame the shot the way a human photographer would,” Grauman said. “It’s essentially learning to record its own video by observing how people capture videos.”

The applications of this research goes back to embodied vision and robots: when moving around in an environment, autonomous robots would have to know in what direction they should direct their camera to complete their task, Grauman said.

“Any real-world application involving a mobile robot, the robot has to make decisions about where to go and how to turn the camera,” she said. “Any real-world application of an autonomous robot, such as a search-and-rescue robot that’s going around the rubble of some natural disaster, there’s no pre-prescribed program you could hand it to say, here’s where you need to move. It has to actually explore on its own and, based on what it sees and what it hears, make intelligent judgement about where to go next or how to move the camera.”

Most recently, Grauman and graduate student Ruohan Guo worked on a project involving audio source separation. They trained a system to learn what kinds of sounds different objects, such as instruments, make and how to automatically separate the sounds.

Grauman said her research group will be working on problems in embodied vision for a while.

“I think that the embodied vision is a big ‘what’s next’ for the field,” she said. “There’s room to now take this bigger venture, so this will be next for a while, there’s a lot we need to do here.”