A new approach in mathematical optimization promises to change how we tackle complex problems across many domains. Bilevel optimization is a prime example: these problems have long challenged experts in machine learning, engineering, and other fields. Recent work offers a streamlined technique that could significantly improve our ability to solve them.

UT Computer Science researchers have developed a technique called Bilevel Optimization Made Easy (BOME), presented at NeurIPS 2022, that solves bilevel optimization problems more efficiently. BOME departs from conventional approaches: it is better suited to deep learning applications and handles the constraints of lower-level problems more flexibly.

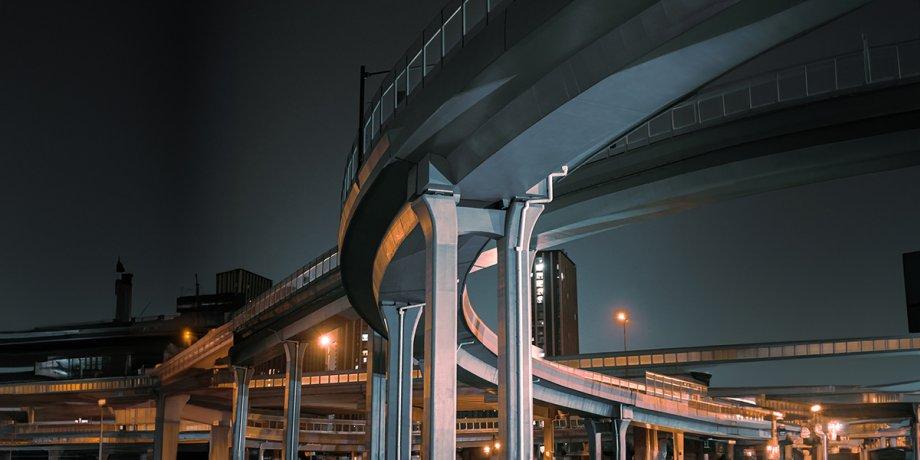

To appreciate BOME's significance, it helps to understand bilevel optimization. Imagine you are tasked with choosing where to build new roads in a city. Each candidate road layout gives rise to a nested optimization problem: determining how traffic would flow on the resulting network. There are thus two levels of optimization: the upper level chooses where to place the roads, and the lower level determines the traffic flow for each placement.
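In symbols, writing $x$ for the upper-level decision (here, road placement), $y$ for the lower-level response (traffic flow), and $f$ and $g$ for the upper- and lower-level objectives (notation chosen here for illustration, not taken from the paper), a bilevel problem has the standard form:

$$
\min_{x}\; f\big(x, y^*(x)\big)
\quad \text{subject to} \quad
y^*(x) \in \operatorname*{arg\,min}_{y}\; g(x, y)
$$

Every evaluation of the upper-level objective requires first solving the inner problem for $y^*(x)$, which is what makes these problems expensive.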

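Standard gradient-based methods struggle to manage the interplay between the two levels. Because the upper-level objective depends on the solution of the lower-level problem, computing its gradient means differentiating through an entire inner optimization, which quickly becomes expensive. This is where BOME steps in.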

“When there are two levels, the gradients can get quite difficult to compute,” says UT Professor Peter Stone. “BOME is a method to do so more efficiently.”
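The difficulty can be made concrete. Write $x$ for the upper-level variables, $y$ for the lower-level variables, $f$ and $g$ for the upper- and lower-level objectives, and $y^*(x)$ for the lower-level solution (notation introduced here for illustration). Assuming $y^*(x)$ is unique and $g$ is twice differentiable with an invertible Hessian $\nabla^2_{yy} g$, the implicit function theorem gives the gradient of the upper-level objective as

$$
\frac{d}{dx}\, f\big(x, y^*(x)\big)
= \nabla_x f \;-\; \nabla^2_{xy} g \,\big(\nabla^2_{yy} g\big)^{-1} \nabla_y f,
$$

with every derivative evaluated at $(x, y^*(x))$. The inverse-Hessian term involves second derivatives of $g$; for models with millions of parameters it cannot be formed explicitly and must be approximated, which is a large part of what makes these gradients costly to compute.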

UT Austin researchers Bo Liu and Mao Ye and Professors Qiang Liu and Peter Stone, together with Professor Stephen J. Wright of UW-Madison, developed BOME to handle this complexity more effectively. Its core idea is to rely only on first-order information (gradients), which simplifies computing gradients when one optimization problem is nested inside another. This efficiency makes BOME especially appealing for hyperparameter optimization. There, training a machine learning model with a given choice of hyperparameters is itself an optimization problem, so finding the best hyperparameters requires solving many such problems.
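The first-order idea can be illustrated with a small sketch. Based on our reading of the BOME paper, the lower-level requirement is replaced by a constraint $q(x, y) = g(x, y) - g(x, y_T) \le 0$, where $y_T$ comes from a few plain gradient steps on $g$. Both levels are then updated together along $\nabla f + \lambda \nabla q$, with $\lambda \ge 0$ chosen so that $q$ keeps decreasing. This is a simplified illustration, not the authors' code: the toy objectives, the helper names (`bome_style_step`, `inner_gd`, `solve`) and the step sizes `xi`, `eta`, `alpha` are hypothetical choices made so that the answer, $(x, y) = (2, 2)$, is known in closed form.

```python
# Minimal sketch of a BOME-style first-order update on a hypothetical toy problem.
# Not the authors' implementation; functions and step sizes are illustrative.
#   upper level: f(x, y) = 0.5*(x - 1)^2 + 0.5*(y - 3)^2
#   lower level: g(x, y) = 0.5*(y - x)^2, so y*(x) = x
# Substituting y*(x) = x gives 0.5*(x - 1)^2 + 0.5*(x - 3)^2, minimized at x = 2,
# so the bilevel solution is (x, y) = (2, 2).


def grad_f(x, y):
    """Gradient (df/dx, df/dy) of the upper-level objective."""
    return x - 1.0, y - 3.0


def grad_g(x, y):
    """Gradient (dg/dx, dg/dy) of the lower-level objective."""
    return x - y, y - x


def inner_gd(x, y, alpha, steps):
    """Approximate y*(x) with plain gradient descent on g(x, .), warm-started at y."""
    for _ in range(steps):
        y = y - alpha * grad_g(x, y)[1]
    return y


def bome_style_step(x, y, xi=0.1, eta=0.5, alpha=0.5, inner_steps=30):
    """One joint update of (x, y); all step sizes are illustrative assumptions."""
    y_t = inner_gd(x, y, alpha, inner_steps)
    # q(x, y) = g(x, y) - g(x, y_t) measures how far y is from solving the lower
    # level. y_t is treated as a constant: no differentiation through inner_gd.
    gx, gy = grad_g(x, y)
    gx_t, _ = grad_g(x, y_t)
    qx, qy = gx - gx_t, gy
    fx, fy = grad_f(x, y)
    q_norm_sq = qx * qx + qy * qy
    if q_norm_sq > 0.0:
        # Smallest lam >= 0 with <grad_f + lam*grad_q, grad_q> >= eta*||grad_q||^2,
        # so the step also decreases q (to first order).
        lam = max((eta * q_norm_sq - (fx * qx + fy * qy)) / q_norm_sq, 0.0)
    else:
        lam = 0.0  # grad_q vanishes: take a plain gradient step on f
    return x - xi * (fx + lam * qx), y - xi * (fy + lam * qy)


def solve(x0=0.0, y0=0.0, iters=200):
    """Run a fixed number of joint updates from (x0, y0)."""
    x, y = x0, y0
    for _ in range(iters):
        x, y = bome_style_step(x, y)
    return x, y


if __name__ == "__main__":
    x, y = solve()
    print(f"x = {x:.6f}, y = {y:.6f}")  # both should be close to 2.0
```

Every update uses only gradients of `f` and `g`: no Hessians are formed and nothing is backpropagated through the inner loop, which is the property the researchers emphasize. The warm start in `inner_gd` (beginning from the current `y`) keeps the inner loop short; on real problems the number of inner steps and the step sizes would need tuning.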

As AI models grow larger and more capable, the need for efficient, scalable bilevel optimization algorithms grows with them. “BOME, as a simple and scalable bilevel optimization algorithm that solely relies on first-order information, allows us to push the frontiers of an array of key challenges in machine learning, including hyperparameter optimization and multi-level decision making,” said UT Associate Professor Qiang Liu.