Subsection 4.2.1 The four fundamental spaces of a matrix

¶We assume that the reader remembers theory related to (vector) subspaces. If a review is in order, we suggest Weeks 9 and 10 of Linear Algebra: Foundations to Frontiers (LAFF) [26].

At some point in your linear algebra education, you should also have learned about the four fundamental spaces of a matrix \(A \in \C^{m \times n}\) (although perhaps only for the real-valued case):

-

The column space, \(\Col( A ) \text{,}\) which is equal to the set of all vectors that are linear combinations of the columns of \(A \)

\begin{equation*} \{ y ~\vert~ y = A x \}. \end{equation*} -

The null space, \(\Null( A ) \text{,}\) which is equal to the set of all vectors that are mapped to the zero vector by \(A \)

\begin{equation*} \{ x ~\vert~ A x = 0 \}. \end{equation*} -

The row space, \(\Rowspace( A ) \text{,}\) which is equal to the set

\begin{equation*} \{ y ~\vert~ y^H = x^H A \}. \end{equation*}Notice that \(\Rowspace( A ) = \Col( A^H ) \text{.}\)

-

The left null space, which is equal to the set of all vectors

\begin{equation*} \{ x ~\vert~ x^H A = 0 \}. \end{equation*}Notice that this set is equal to \(\Null( A^H ) \text{.}\)

Definition 4.2.1.1. Orthogonal subspaces.

Two subspaces \(S, T \subset \Cn \) are orthogonal if any two arbitrary vectors (and hence all vectors) \(x \in S \) and \(y \in T \) are orthogonal: \(x^H y = 0 \text{.}\)

The following exercises help you refresh your skills regarding these subspaces.

Homework 4.2.1.1.

Let \(A \in \C^{m \times n} \text{.}\) Show that its row space, \(\Rowspace( A ) \text{,}\) and null space, \(\Null( A ) \text{,}\) are orthogonal.

Pick arbitrary \(x \in \Rowspace( A ) \) and \(y \in \Null( A ) \text{.}\) We need to show that these two vectors are orthogonal. Then

Homework 4.2.1.2.

Let \(A \in \C^{m \times n} \text{.}\) Show that its column space, \(\Col( A ) \text{,}\) and left null space, \(\Null( A^H ) \text{,}\) are orthogonal.

Pick arbitrary \(x \in \Col( A ) \) and \(y \in \Null( A^H ) \text{.}\) Then

Homework 4.2.1.3.

Let \(\{ s_0 , \cdots , s_{r-1} \} \) be a basis for subspace \({\cal S} \subset \Cn \) and \(\{ t_0 , \cdots , t_{k-1} \} \) be a basis for subspace \({\cal T} \subset \Cn \text{.}\) Show that the following are equivalent statements:

Subspaces \({\cal S} , {\cal T} \) are orthogonal.

The vectors in \(\{ s_0 , \cdots , s_{r-1} \} \) are orthogonal to the vectors in \(\{ t_0 , \cdots , t_{k-1} \} \text{.}\)

\(s_i^H t_j = 0 \) for all \(0 \leq i \lt r \) and \(0 \leq j \lt k \text{.}\)

\(\left( \begin{array}{c | c | c } s_0 \amp \cdots \amp s_{r-1} \end{array} \right)^H \left( \begin{array}{c | c | c } t_0 \amp \cdots \amp t_{k-1} \end{array} \right) = 0,\) the zero matrix of appropriate size.

We are going to prove the equivalence of all the statements by showing that 1. implies 2., 2. implies 3., 3. implies 4., and 4. implies 1.

-

1. implies 2.

Subspaces \({\cal S} \) and \({\cal T} \) are orthogonal if any vectors \(x \in {\cal S} \) and \(y \in {\cal T} \)are orthogonal. Obviously, this means that \(s_i \) is orthogonal to \(t_j\) for \(0 \leq i \lt r \) and \(0 \leq j \lt k \text{.}\)

-

2. implies 3.

This is true by definition of what it means for two sets of vectors to be orthogonoal.

-

3. implies 4.

\begin{equation*} \left( \begin{array}{c | c | c } s_0 \amp \cdots \amp s_{r-1} \end{array} \right)^H \left( \begin{array}{c | c | c } t_0 \amp \cdots \amp t_{k-1} \end{array} \right) = \left( \begin{array}{c c c} s_0^H t_0 \amp s_0^H t_1 \amp \cdots \\ s_1^H t_0 \amp s_1^H t_1 \amp \cdots \\ \vdots \amp \vdots \amp \end{array} \right) \end{equation*} -

4. implies 1.

We need to show that if \(x \in {\cal S} \) and \(y \in {\cal T} \) then \(x^H y = 0 \text{.}\)

Notice that

\begin{equation*} x = \left( \begin{array}{c | c | c } s_0 \amp \cdots \amp s_{r-1} \end{array} \right) \left( \begin{array}{c} \widehat \chi_0 \\ \vdots \\ \widehat \chi_{r-1} \end{array} \right) \mbox{ and } y = \left( \begin{array}{c | c | c } t_0 \amp \cdots \amp t_{k-1} \end{array} \right) \left( \begin{array}{c} \widehat \psi_0 \\ \vdots \\ \widehat \psi_{k-1} \end{array} \right) \end{equation*}for appropriate choices of \(\widehat x \) and \(\widehat y \text{.}\) But then

\begin{equation*} \begin{array}{rcl} x^H y \amp = \amp \left( \left( \begin{array}{c | c | c } s_0 \amp \cdots \amp s_{r-1} \end{array} \right) \left( \begin{array}{c} \widehat \chi_0 \\ \vdots \\ \widehat \chi_{r-1} \end{array} \right) \right)^H \left( \begin{array}{c | c | c } t_0 \amp \cdots \amp t_{k-1} \end{array} \right) \left( \begin{array}{c} \widehat \psi_0 \\ \vdots \\ \widehat \psi_{k-1} \end{array} \right) \\ \amp = \amp \left( \begin{array}{c} \widehat \chi_0 \\ \vdots \\ \widehat \chi_{r-1} \end{array} \right)^H \begin{array}[t]{c} \underbrace{ \left( \begin{array}{c | c | c } s_0 \amp \cdots \amp s_{r-1} \end{array} \right)^H \left( \begin{array}{c | c | c } t_0 \amp \cdots \amp t_{k-1} \end{array} \right) } \\ 0_{r \times k} \end{array} \left( \begin{array}{c} \widehat \psi_0 \\ \vdots \\ \widehat \psi_{k-1} \end{array} \right) \\ \amp = \amp 0 \end{array} \end{equation*}

Homework 4.2.1.4.

Let \(A \in \C^{m \times n}\text{.}\) Show that any vector \(x \in \Cn \) can be written as \(x = x_r + x_n \text{,}\) where \(x_r \in \Rowspace( A ) \) and \(x_n \in \Null( A ) \text{,}\) and \(x_r^H x_n = 0 \text{.}\)

Let \(r \) be the rank of matrix \(A \text{.}\) In a basic linear algebra course you learned that then the dimension of the row space, \(\Rowspace( A ) \text{,}\) is \(r \) and the dimension of the null space, \(\Null( A ) \text{,}\) is \(n-r \text{.}\)

Let \(\{ w_0, \cdots , w_{r-1} \} \) be a basis for \(\Rowspace( A ) \) and \(\{ w_r, \cdots , w_{n-1} \} \) be a basis for \(\Null( A ) \text{.}\)

TRUE

Now prove it!

Let \(r \) be the rank of matrix \(A \text{.}\) In a basic linear algebra course you learned that then the dimension of the row space, \(\Rowspace( A ) \text{,}\) is \(r \) and the dimension of the null space, \(\Null( A ) \text{,}\) is \(n-r \text{.}\)

Let \(\{ w_0, \cdots , w_{r-1} \} \) be a basis for \(\Rowspace( A ) \) and \(\{ w_r, \cdots , w_{n-1} \} \) be a basis for \(\Null( A ) \text{.}\) Since we know that these two spaces are orthogonal, we know that \(\{ w_0, \cdots , w_{r-1} \} \) are orthogonal to \(\{ w_r, \cdots , w_{n-1} \} \text{.}\) Hence \(\{ w_0, \cdots , w_{n-1} \} \) are linearly independent and form a basis for \(\Cn \text{.}\) Thus, there exist coefficients \(\{ \alpha_0, \cdots , \alpha_{n-1} \} \) such that

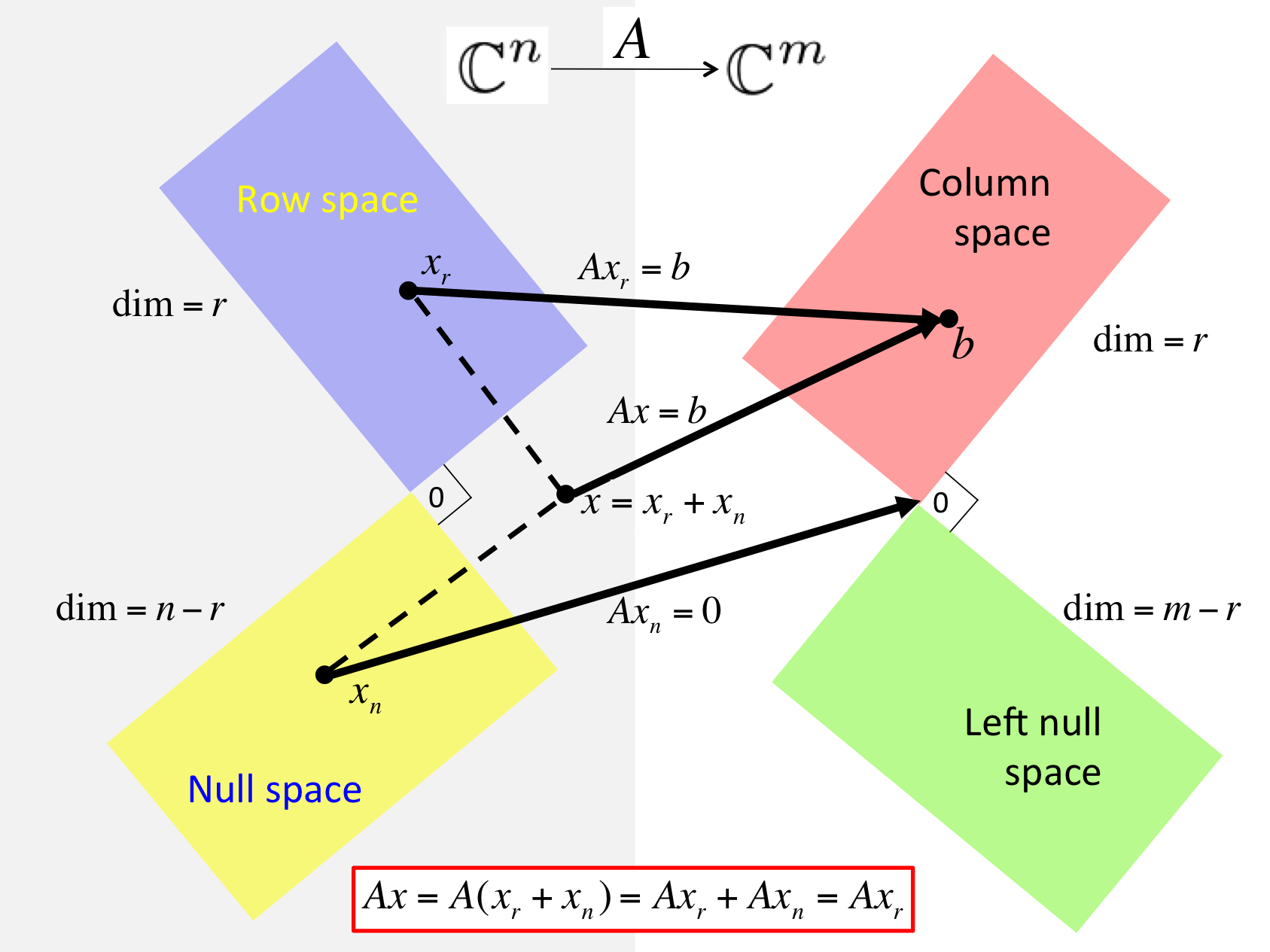

Figure 4.2.1.2 captures the insights so far.

That figure also captures that if \(r \) is the rank of matrix, then

\(\dim( \Rowspace( A ) ) = \dim( \Col( A ) ) = r \text{;}\)

\(\dim( \Null( A ) ) = n- r \text{;}\)

\(\dim( \Null( A^H ) ) = m-r \text{.}\)

Proving this is a bit cumbersome given the knowledge we have so far, but becomes very easy once we relate the various spaces to the SVD, in Subsection 4.3.1. So, we just state it for now.