Unit 2.1.3 What you will learn

This week, we learn how to attain high performance for small matrices by exploiting instruction-level parallelism.

Upon completion of this week, we will be able to:

Realize the limitations of simple implementations by comparing their performance to that of a high-performing reference implementation.

Recognize that greatly improving performance doesn't necessarily yield good performance.

Determine the theoretical peak performance of a processing core.

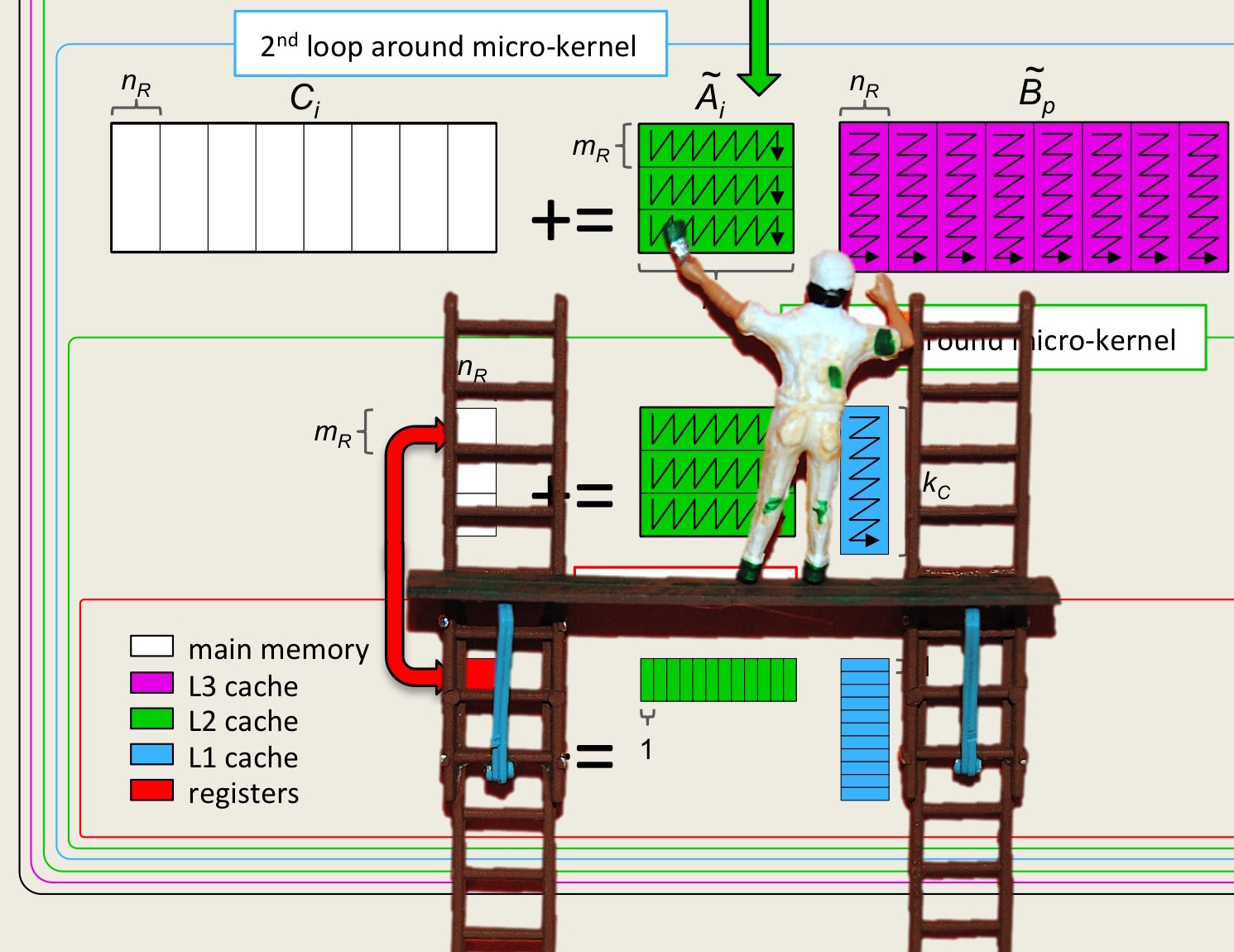

Orchestrate matrix-matrix multiplication in terms of computations with submatrices.

Block matrix-matrix multiplication for registers.

Analyze the cost of moving data between memory and registers.

Cast computation in terms of vector intrinsic functions that give access to vector registers and vector instructions.

Optimize the micro-kernel that will become our unit of computation for future optimizations.
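The goals of blocking for registers and analyzing data movement can be made more concrete with a small example. What follows is a minimal sketch, in plain C, of a 4 x 4 micro-kernel that computes C := A B + C for a small block of C held in local accumulators. Several details are illustrative assumptions rather than the course's own code: the name `gemm_4x4_scalar`, the block sizes MR = NR = 4, and column-major storage with leading dimensions `ldA`, `ldB`, `ldC`. The sketch uses no vector intrinsics. Whether the compiler actually keeps the 16 accumulators in registers depends on the compiler and the optimization flags.

```c
#include <assert.h>

#define MR 4 /* rows of the C block (assumed block size) */
#define NR 4 /* columns of the C block (assumed block size) */

/* Hypothetical micro-kernel: C := A * B + C, where C is MR x NR, A is MR x k,
   and B is k x NR, all stored in column-major order with leading dimensions
   ldA, ldB, ldC. */
void gemm_4x4_scalar(int k, const double *A, int ldA,
                     const double *B, int ldB,
                     double *C, int ldC)
{
    assert(k >= 0 && ldA >= MR && ldB >= k && ldC >= MR);

    /* Load the MR x NR block of C once into local accumulators. */
    double c[MR][NR];
    for (int j = 0; j < NR; j++)
        for (int i = 0; i < MR; i++)
            c[i][j] = C[i + j * ldC];

    /* A sequence of k rank-1 updates: c += (column p of A) * (row p of B). */
    for (int p = 0; p < k; p++) {
        double a[MR], b[NR];
        for (int i = 0; i < MR; i++)
            a[i] = A[i + p * ldA];          /* MR loads from A */
        for (int j = 0; j < NR; j++)
            b[j] = B[p + j * ldB];          /* NR loads from B */
        for (int j = 0; j < NR; j++)
            for (int i = 0; i < MR; i++)
                c[i][j] += a[i] * b[j];     /* 2 * MR * NR flops in total */
    }

    /* Store the updated block of C once. */
    for (int j = 0; j < NR; j++)
        for (int i = 0; i < MR; i++)
            C[i + j * ldC] = c[i][j];
}
```

Each iteration of the `p` loop loads MR + NR = 8 elements of A and B and performs 2 MR NR = 32 flops. The 16 elements of C are loaded and stored only once per call. This favorable ratio of flops to memory operations is what makes blocking for registers worthwhile.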

The enrichments introduce us to:

Theoretical lower bounds on how much data must be moved between memory and registers when executing a matrix-matrix multiplication.

Strassen's algorithm for matrix-matrix multiplication.