Unit 4.4.2 Amdahl's law

Amdahl's law is a simple observation about the limits on parallel speedup and efficiency. Consider an operation that requires sequential time
\[ T \]
for computation. Suppose that a fraction \(f \) of this time is not parallelized (or cannot be parallelized), and the remaining fraction \((1-f)\) is parallelized with \(t \) threads. We can then write
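\[ T = \underbrace{f T}_{\text{part not parallelized}} + \underbrace{(1-f) T}_{\text{part parallelized}} \]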
and, assuming super-linear speedup is not expected, the total execution time with \(t \) threads, \(T_t \text{,}\) obeys the inequality
\[ T_t \geq f T + \frac{(1-f) T}{t} \geq f T . \]
This, in turn, means that the speedup is bounded by
\[ S_t = \frac{T}{T_t} \leq \frac{T}{f T} = \frac{1}{f} . \]
What we notice is that the maximal speedup is bounded by the inverse of the fraction of the execution time that is not parallelized. If, for example, \(1/5 \) of the execution time is spent in code that is not parallelized, then no matter how many threads you use, you can at best compute the result \(1 / ( 1/ 5 ) = 5 \) times faster. This means that one must think about parallelizing all parts of a code.
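To make the bound concrete, here is a minimal Python sketch that evaluates \(S_t = T / T_t\) under the idealized assumption that the parallelized part scales perfectly, so that \(T_t = fT + (1-f)T/t\). The function name `amdahl_speedup` is our own, hypothetical choice.

```python
def amdahl_speedup(f: float, t: int) -> float:
    """Speedup T / T_t when a fraction f of the sequential time T is not
    parallelized and the remaining (1 - f) is split perfectly over t threads.
    T cancels out of the ratio, so it is not an argument."""
    if not 0.0 <= f <= 1.0:
        raise ValueError("f must lie in [0, 1]")
    if t < 1:
        raise ValueError("t must be at least 1")
    return 1.0 / (f + (1.0 - f) / t)


if __name__ == "__main__":
    f = 1 / 5  # one fifth of the time is spent in unparallelized code
    for t in (1, 2, 4, 8, 16, 64, 1024):
        print(f"t = {t:5d}  speedup = {amdahl_speedup(f, t):.3f}  (bound 1/f = {1 / f:.1f})")
```

With \(f = 1/5\), sixteen threads already give a speedup of about 4, yet even 1024 threads stay just below the bound of 5.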

Remark 4.4.3.

Amdahl's law says that if one does not parallelize a part of the sequential code in which a fraction \(f \) of the time is spent, then the speedup attained, regardless of how many threads are used, is bounded by \(1/f \text{.}\)

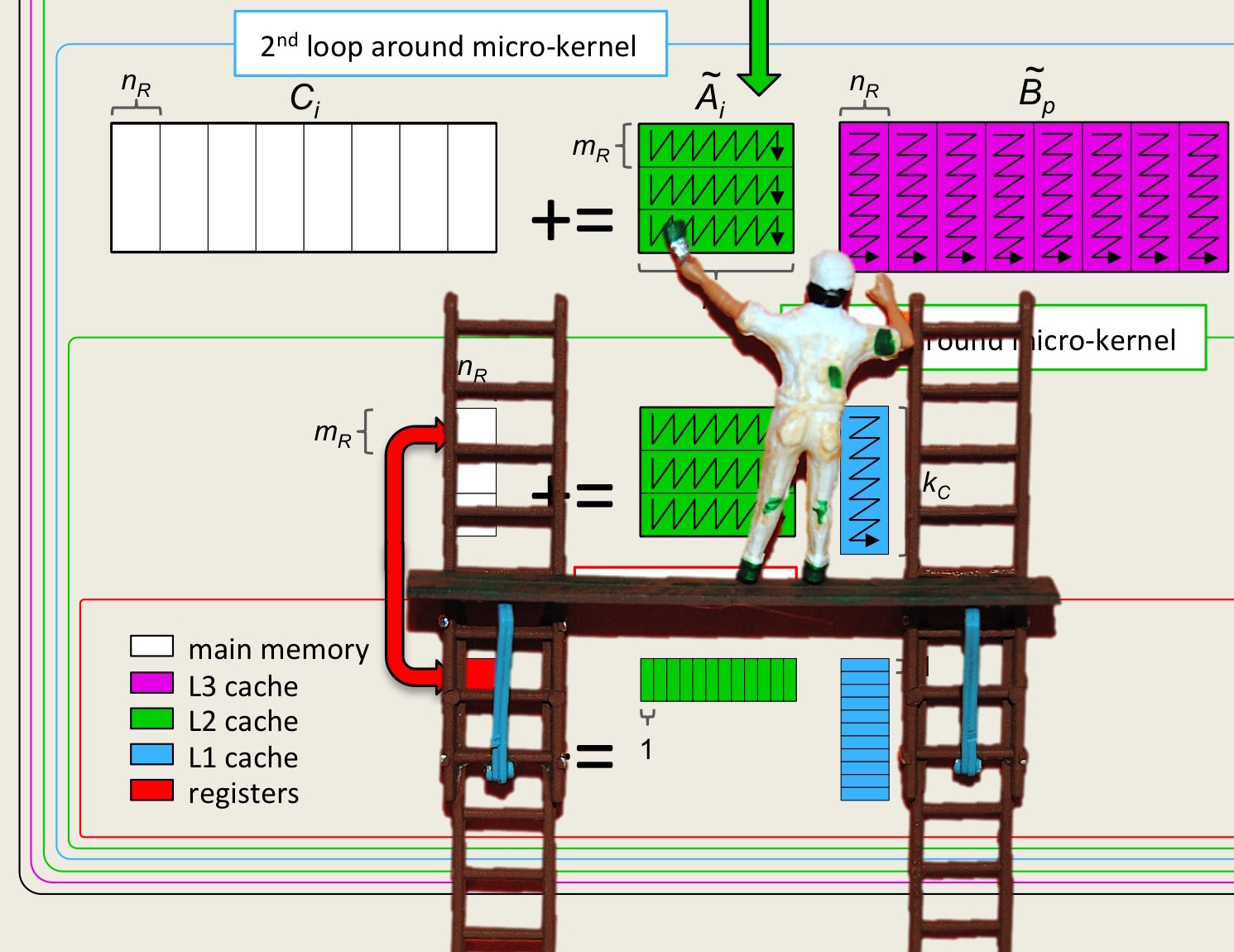

The point is: so far, we have focused on parallelizing the computational part of matrix-matrix multiplication. We should also parallelize the packing, since it requires a nontrivial part of the total execution time.

For parallelizing matrix-matrix multiplication, there is some hope. In our situation, the execution time is a function of the problem size, which we can model by the computation time plus overhead. A high-performance implementation may have a cost function that looks something like
\[ T( n ) = 2 n^3 \gamma + C n^2 \beta , \]
where \(\gamma \) is the time required for executing a floating point operation, \(\beta \) is some constant related to the time required to move a floating point number, and \(C \) is some other constant. (Notice that we have not done a thorough analysis of the cost of the final implementation in Week 3, so this formula is for illustrative purposes.) If now the computation is parallelized perfectly among the threads and, in a pessimistic situation, the time related to moving data is not parallelized at all, then we get that
\[ T_t( n ) = \frac{2 n^3 \gamma}{t} + C n^2 \beta , \]
so that the speedup is given by
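\[ S_t( n ) = \frac{T( n )}{T_t( n )} = \frac{2 n^3 \gamma + C n^2 \beta}{\frac{2 n^3 \gamma}{t} + C n^2 \beta} , \]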
and the efficiency is given by
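\[ E_t( n ) = \frac{S_t( n )}{t} = \frac{2 n^3 \gamma + C n^2 \beta}{2 n^3 \gamma + t C n^2 \beta} = \frac{1}{1 + \left( \frac{t C \beta}{2 \gamma} \right) \frac{1}{n}} + \frac{1}{\left( \frac{2 \gamma}{C \beta} \right) n + t} . \]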
Now, if \(t \) is also fixed, then the expressions in parentheses are constants and, as \(n \) gets large, the first term converges to \(1 \) while the second term converges to \(0 \text{.}\) So, as the problem size gets large, the efficiency starts approaching 100%.
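To see this convergence numerically, the following minimal Python sketch evaluates the illustrative cost model for a fixed number of threads. The constants chosen for \(\gamma \), \(\beta \), and \(C \) are made-up placeholders, not measurements, and the function names are hypothetical. The sketch also confirms that computing \(S_t(n)/t\) directly agrees with the two-term form of \(E_t(n)\).

```python
def time_sequential(n, gamma, beta, C):
    """Illustrative cost model T(n) = 2 n^3 gamma + C n^2 beta."""
    return 2 * n**3 * gamma + C * n**2 * beta


def time_parallel(n, t, gamma, beta, C):
    """T_t(n): computation split perfectly over t threads, while the data
    movement term C n^2 beta is not parallelized at all (pessimistic case)."""
    return 2 * n**3 * gamma / t + C * n**2 * beta


def efficiency(n, t, gamma, beta, C):
    """E_t(n) = S_t(n) / t, computed directly from the two execution times."""
    return time_sequential(n, gamma, beta, C) / time_parallel(n, t, gamma, beta, C) / t


def efficiency_two_terms(n, t, gamma, beta, C):
    """The same quantity, written as the sum of the two terms in the text."""
    first = 1 / (1 + (t * C * beta / (2 * gamma)) / n)   # tends to 1 as n grows
    second = 1 / ((2 * gamma / (C * beta)) * n + t)      # tends to 0 as n grows
    return first + second


if __name__ == "__main__":
    # Placeholder constants (assumed, not measured): one time unit per flop,
    # moving a number costs as much as 10 flops, and C = 4; eight threads.
    gamma, beta, C, t = 1.0, 10.0, 4.0, 8
    for n in (100, 500, 1000, 5000, 10000):
        print(f"n = {n:6d}  E_t(n) = {efficiency(n, t, gamma, beta, C):.3f}")
```

With these placeholder values the efficiency climbs from roughly 0.46 at \(n = 100 \) to roughly 0.99 at \(n = 10000 \text{.}\)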

What this means is that whether all or part of the overhead can also be parallelized affects how quickly we start seeing high efficiency, rather than whether we eventually attain high efficiency for a large enough problem. Of course, there is a limit to how large a problem we can store and/or how large a problem we actually want to compute.
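One way to quantify "how quickly" is to ask for the smallest \(n \) at which the efficiency reaches a target. The Python sketch below does this under an assumption that goes beyond the model in the text: a fraction \(p \) of the overhead \(C n^2 \beta \) is parallelized perfectly over the \(t \) threads and the rest is not, so \(p = 0 \) recovers the pessimistic model above. Dividing numerator and denominator of \(E_t(n)\) by \(n^2 \) gives \(E_t(n) = (2 n \gamma + C \beta) / (2 n \gamma + k C \beta)\) with \(k = t(1-p) + p \ge 1 \text{,}\) which increases with \(n \text{,}\) so the threshold has a closed form. The function names and constants are hypothetical.

```python
import math


def efficiency_partial(n, t, p, gamma, beta, C):
    """E_t(n) under an assumed extension of the text's model: a fraction p of the
    overhead C n^2 beta is parallelized perfectly over t threads, 1 - p is not."""
    seq = 2 * n**3 * gamma + C * n**2 * beta
    par = 2 * n**3 * gamma / t + ((1 - p) + p / t) * C * n**2 * beta
    return seq / (t * par)


def smallest_n(target, t, p, gamma, beta, C):
    """Smallest integer n >= 1 with E_t(n) >= target, for 0 < target < 1.

    Since E_t(n) = (2 n gamma + C beta) / (2 n gamma + k C beta) with
    k = t (1 - p) + p, E_t(n) >= target exactly when
        n >= C beta (k target - 1) / (2 gamma (1 - target)).
    """
    if not 0.0 < target < 1.0:
        raise ValueError("target must lie strictly between 0 and 1")
    k = t * (1 - p) + p
    bound = C * beta * (k * target - 1) / (2 * gamma * (1 - target))
    return max(1, math.ceil(bound))


if __name__ == "__main__":
    gamma, beta, C, t = 1.0, 10.0, 4.0, 8  # placeholder constants, not measurements
    for p in (0.0, 0.5, 0.9, 1.0):
        print(f"p = {p:.1f}  smallest n with 90% efficiency: {smallest_n(0.9, t, p, gamma, beta, C)}")
```

With these placeholder constants and eight threads, reaching 90% efficiency needs \(n \) of roughly 1240 when none of the overhead is parallelized, about 610 when half of it is, and about 106 when 90% of it is; if all of it is parallelized, this idealized model is perfectly efficient for every \(n \text{.}\)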