UT College of Natural Sciences News | Esther R Robards-Forbes

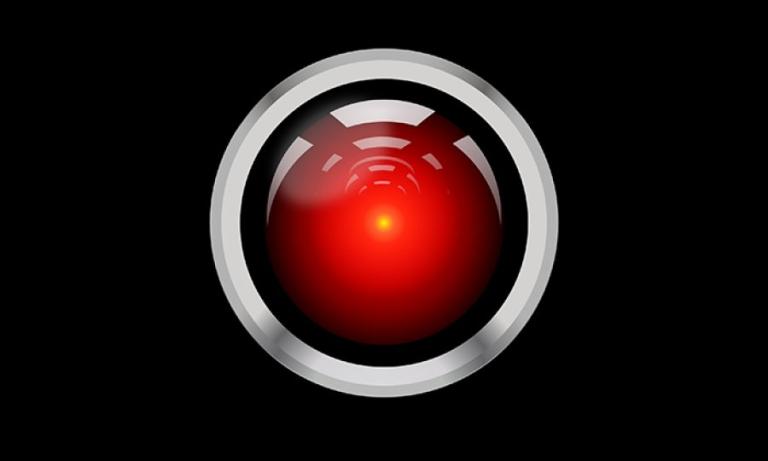

Tuesday marks the 50th anniversary of "2001: A Space Odyssey." The groundbreaking science-fiction film earned an Academy Award for Best Visual Effects and appears on several of the American Film Institute's Top 100 lists. But what many remember best about the movie is HAL 9000, the murderous artificial intelligence aboard the spaceship that has been ranked cinema's 13th best villain.

As one of fiction's most famous AI agents turns 50, we sat down with artificial intelligence expert and assistant professor of computer science Scott Niekum to find out how well the film holds up to the real thing. The conversation below has been edited for space.

When I think of artificial intelligence, HAL 9000 is one of the first things I think of. Is that a problem?

I think AI definitely has a PR problem in some ways. The first thing people think of is the Terminator or HAL 9000 rather than Baymax from "Big Hero 6." I'm actually really glad that film got made, because it presents a really hopeful future in which AI and robots help humanity.

There are always doomsayers about any new technology, but AI is particularly scary to a lot of people because the public perception of it is inaccurate. People think AI and machine learning researchers are recreating human intelligence and deploying it on problems, and that's not what is happening.

Let's set the record straight. What is AI really?

At least right now in AI, we are not recreating some generalized intelligence that we just apply to a whole lot of problems. Instead, we build algorithms that are targeted to one very specific problem, like controlling an elevator, and that is the only thing they do. A system like HAL 9000 or the Terminator can only exist and be dangerous if it's a general intelligence that can do and learn anything. In the elevator-control example, the system can learn how to be better at its job, but it can't learn about the outside world. It's simply not capable of that. And it's important to remember that there is a lot of work happening right now in machine learning around safety. We want to make sure that when systems are self-improving, they are not going to do something that is either incorrect or dangerous.

Early on in the film, HAL states that he is foolproof and incapable of error. Can an AI ever be totally foolproof?

It depends on how you define foolproof and error. One example that people talk about a lot now is self-driving cars. We would love to have self-driving cars that will never be in an accident, but that can't happen. You can't prevent a deer from jumping on top of the car. There are factors in the real world that you just can't control; we can't individually control every element of it. We can really only optimize, not become perfect. …

One thing that I'm working on currently with one of my students, Daniel Brown, is this idea that, if we want to get robots out in the world in people's homes and workplaces, we can't have an expert like me or one of my students program them for every home they'd be in. It would be great if we could stick a robot out there, and the robot could just watch someone do something once or twice and now it knows how to do that thing. We work on building algorithms that can do that. But what does it mean to get the gist of something? If I show a couple of demonstrations of a task to the robot, what would it take for it to be able to say, "OK, I've seen enough demos. I get what you're showing me," or "I think I need a few more."

It really gets at the heart of what it means to understand something.

Is it important to put constraints around AI? For example, it seems like HAL was able to lie, manipulate people and put its own survival ahead of theirs. Maybe the programmers could have prevented that?

People are worried about AI being designed poorly and getting out of control, but if you design a bridge poorly, it's also going to get out of control. This happens across all engineering, and I don't see AI as any different.

Because AI involves processes, like learning, that are easily anthropomorphized, we project all kinds of human qualities onto it. It's not just laypeople. This is a big problem in science, too. When a system performs well or performs in a certain way, it's super easy for us as scientists to project things like understanding onto it, when sometimes there is a much simpler explanation.

In the film, when Dave, the mission commander, goes to deactivate HAL, the AI pleads with him not to, saying, "I'm afraid, Dave." What are the ethics of destroying something that has expressed an emotion?

It's not alive. It's not human. It doesn't have biological drives or emotions like we do. We still have a very poor understanding of consciousness, and in my opinion we don't yet have AI systems that are as intelligent as even ants. In robotics, we can't even reliably pick up a can of Coke.

Even if these systems are easy to anthropomorphize, and even if they do become more intelligent, they are just built systems. I don't think there is any ethical problem there.

HAL was, according to the film, born in 1992. Remembering the Apple Macintosh I had in 1992, I'm guessing we were nowhere near that capability at the time. Is something even remotely like HAL possible now? If not, how far off are we?

I think the closest thing we have to HAL right now are personal assistants like Siri and Alexa. If you've used them, you know they are OK. They are not ideal. They're not going to be running a spaceship any time soon.

People are very afraid of AI, and yet it is all around us in ways that have become semitransparent, like Google Maps, and people aren't freaked out about those. As AI applications become more commonplace, they stop being called AI. The definition of AI just keeps getting pushed back.

How realistic is an agent like HAL?

In principle, there is nothing preventing the creation of human-level intelligence in a computer. But would it behave that way? Not if it was engineered well.

There are all sorts of good questions to ask about AI (is it going to be used ethically in warfare, for example?), but those questions are mostly about how humans are going to use AI, rather than about the technology taking off and doing something dangerous on its own.

What's the most realistic depiction of AI that you've seen in pop culture or film?

I felt like "Her" was actually quite good. It wasn't a robot or an embodied thing; it was just a Siri-like assistant taken to the next level, and it didn't seem so ridiculous.

A much more forward-looking example, but one where I thought they didn't screw everything up, was "Westworld." They did a pretty reasonable job of depicting what future intelligent, self-preserving-but-restricted agents might look like. There are basically just a bunch of knobs you can turn to adjust an agent's aggression and intelligence, and you know that when you turn a knob the wrong way, bad things happen. I thought that was a reasonable depiction, but of a future that is very far off and doesn't resemble at all what things are like now or where they are necessarily going to go.