Floorplans are used in many industries to help people visualize the inside of a building without seeing it in person. Traditionally, floorplans have been created by directly observing a 3D environment, either manually or with the aid of 3D sensors. But what happens when the luxury of observing the whole 3D environment isn’t available, for example, when a robot is introduced to a new environment? Would it be able to quickly create floor maps without seeing the entire environment in detail?

This is the exact question that UT Computer Science Professor Kristen Grauman, together with CMU PhD student Senthil Purushwalkam and other researchers, explores in “Audio-Visual Floorplan Reconstruction.” The paper examines how sound together with sight can be used to create accurate 2D floor plans without physically seeing the entire environment. It aims to show how the newly introduced machine learning model, called AV-Map, can create accurate floor maps from a short video.

Professor Grauman’s research focuses on computer vision and machine learning, specializing in visual recognition and embodied perception. This background led her to investigate how sound and sight could be used together to interpret the 3D world.

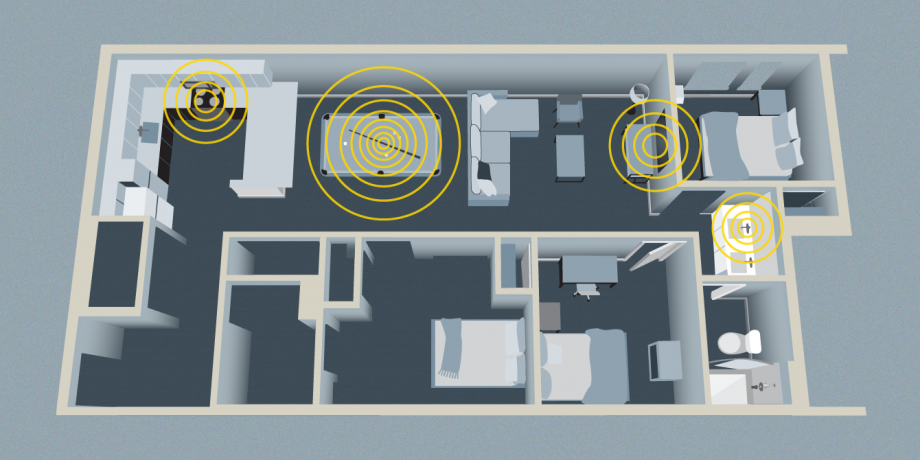

The AV-Map model brings together both sensing modalities to rapidly infer the layout of an environment, including parts not visible to the camera. The model works because observed sound is inherently driven by geometry: as audio bounces around a room, it reveals the shapes and objects in that room, even those that can’t be directly seen. The research also shows that sound can reveal possible room layouts; for instance, the sound of a shower indicates a bathroom. To generate a floor plan, the AV-Map model uses both passive sound generated by the environment and active sound emitted by the camera. As an agent moves through an environment, it emits a sound, and the model uses the way that sound bounces off walls to estimate the room layout.
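The physical intuition behind "sound is driven by geometry" can be illustrated with a simple echo calculation. The sketch below is not the AV-Map method, which is a learned model whose implementation is not described here. It only shows, under simplifying assumptions (a single flat wall, one clean echo, no noise, sound travelling at about 343 m/s), how the delay of an echo encodes the distance to a wall. All function names and parameters are hypothetical.

```python
import numpy as np

SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound in air at roughly 20 °C


def make_pulse(sample_rate_hz, n_samples=64, tone_hz=2000.0):
    """Build a short windowed tone burst to act as the emitted sound."""
    t = np.arange(n_samples) / sample_rate_hz
    return np.hanning(n_samples) * np.sin(2.0 * np.pi * tone_hz * t)


def simulate_echo(pulse, sample_rate_hz, wall_distance_m,
                  attenuation=0.5, n_samples=4096):
    """Simulate a recording that contains only the echo from one wall.

    The direct (non-reflected) sound is left out to keep the example small.
    """
    round_trip_s = 2.0 * wall_distance_m / SPEED_OF_SOUND_M_S
    delay = int(round(round_trip_s * sample_rate_hz))
    recording = np.zeros(n_samples)
    recording[delay:delay + len(pulse)] += attenuation * pulse
    return recording


def estimate_wall_distance(pulse, recording, sample_rate_hz):
    """Estimate the wall distance from the lag of the strongest echo."""
    # Cross-correlate the recording with the emitted pulse; the index of the
    # peak is the round-trip delay in samples.
    corr = np.correlate(recording, pulse, mode="valid")
    delay_samples = int(np.argmax(corr))
    round_trip_s = delay_samples / sample_rate_hz
    # The sound travels to the wall and back, so halve the path length.
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0


if __name__ == "__main__":
    sample_rate_hz = 16_000
    pulse = make_pulse(sample_rate_hz)
    recording = simulate_echo(pulse, sample_rate_hz, wall_distance_m=3.0)
    estimate = estimate_wall_distance(pulse, recording, sample_rate_hz)
    print(f"Estimated wall distance: {estimate:.2f} m")
```

Real rooms produce many overlapping reflections plus background noise, which is one reason a learned model that combines audio with visual cues is a more natural fit than a hand-written rule like this one.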

Based on results from 85 real-world multi-room environments, the AV-Map model consistently outperformed vision-based mapping. With just a few glimpses spanning 26% of an area, the model can estimate a floor plan layout with 66% accuracy.

This type of technology has immense implications for the future of robotics. In certain situations, robots do not have the luxury of scouring an area to figure out its layout. The AV-Map model would give robots the ability to quickly survey their environment and figure out how to move around.

The potential applications for the AV-Map model are not limited to robotics, however. “We come at it from the field of computer vision,” Dr. Grauman says, “and finding ways to advance what can be done technically.”