Digital media is one of the best ways to engage with new communities, and every click can lead to engaging platforms like TikTok, Instagram Reels, and YouTube Shorts. The intricate webcam visuals and overlays layered onto this content make it even more immersive. Now imagine that experience if you are unable to see the video. For people with visual impairments, accessing this content comes with many challenges. These platforms currently lack effective ways to bridge the accessibility gap for the blind and low vision (BLV) community, leaving BLV users unable to fully engage with content that others enjoy seamlessly.

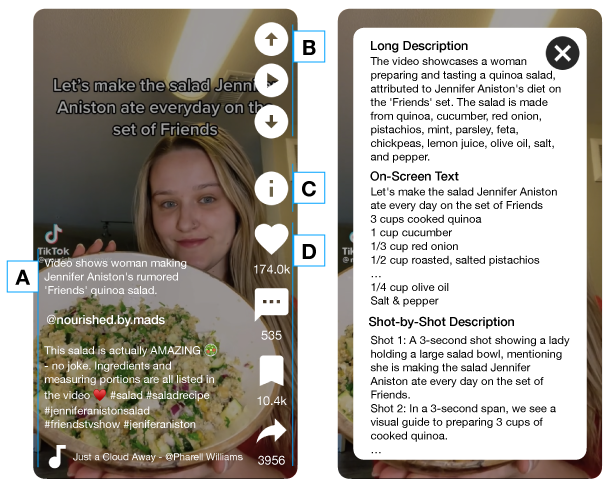

ShortScribe is a system that makes video content accessible by transforming visual elements into audio descriptions. The process begins by breaking a video into discrete segments, or "shots," each representing a specific scene. ShortScribe then analyzes and interprets the visual information in each shot using vision language models such as BLIP-2, along with OCR (Optical Character Recognition) to read on-screen text. Finally, it uses GPT-4, a large language model, to generate detailed textual descriptions of the visuals. These descriptions are provided at several levels of detail, so BLV users can either get a general overview or drill into more detailed descriptions, depending on their needs. This hierarchical approach to audio description helps bridge the accessibility gap in digital media: BLV users can understand and enjoy video content while customizing their viewing experience.
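To make the hierarchical idea concrete, here is a minimal Python sketch of how per-shot signals might be combined into an overview plus brief and detailed shot descriptions. It is an illustration under stated assumptions, not ShortScribe's actual implementation: the names `Shot`, `ShotDescription`, `VideoDescription`, `describe_video`, and `naive_summarize` are all hypothetical, the captions and OCR text are supplied by hand rather than produced by BLIP-2 or an OCR engine, and a simple string join stands in for the GPT-4 step.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Shot:
    """One video segment plus the raw signals extracted from it (hypothetical fields)."""
    start: float        # shot start time in seconds
    end: float          # shot end time in seconds
    caption: str        # stand-in for a vision language model caption
    ocr_text: str = ""  # stand-in for on-screen text found by OCR


@dataclass
class ShotDescription:
    start: float
    end: float
    brief: str     # short description of the shot
    detailed: str  # brief description plus any on-screen text


@dataclass
class VideoDescription:
    overview: str                 # top level: whole-video summary
    shots: List[ShotDescription]  # lower level: per-shot descriptions


def naive_summarize(texts: List[str]) -> str:
    """Placeholder for the language-model step: joins the non-empty inputs."""
    return " ".join(t.strip() for t in texts if t.strip())


def describe_video(
    shots: List[Shot],
    summarize: Callable[[List[str]], str] = naive_summarize,
) -> VideoDescription:
    """Build a two-level description: a video overview and per-shot details."""
    described = []
    for shot in shots:
        brief = shot.caption.strip()
        detailed = brief
        text = shot.ocr_text.strip()
        if text:
            # Only the detailed level includes the on-screen text.
            detailed = f'{brief} On-screen text: "{text}".'
        described.append(ShotDescription(shot.start, shot.end, brief, detailed))
    overview = summarize([d.brief for d in described])
    return VideoDescription(overview, described)


example = describe_video([
    Shot(0.0, 2.5, "A person holds a mug.", "Morning routine"),
    Shot(2.5, 6.0, "Coffee pours into the mug."),
])
print(example.overview)
for d in example.shots:
    print(f"[{d.start:.1f}s-{d.end:.1f}s] {d.detailed}")
```

In a sketch like this, a listener could hear only `overview` to decide whether a video is worth their time, then step through `shots` for more detail. Passing a different `summarize` callable is where a real language model could plug in.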

The researchers behind this work, Dr. Amy Pavel, Tess Van Daele, Akhil Iyer, Jalyn C. Derry, and Mina Huh from UT Austin, along with Yuning Zhang from Cornell, underscore the growing need for accessible video content. As short-form videos gain popularity on social media, AI's role in addressing accessibility issues will become increasingly critical to ensuring these platforms are accessible to everyone, including those with visual impairments. The ShortScribe system enhances the viewing experience for BLV users while also setting a precedent for how technology can be harnessed to overcome accessibility barriers in media consumption.

This work contributes to the field of human-computer interaction and accessibility, and it paves the way for further innovations in video accessibility. As video content expands, researchers, platform developers, and content creators are collaborating to improve accessibility. These advancements help ensure the digital world is inclusive for everyone.