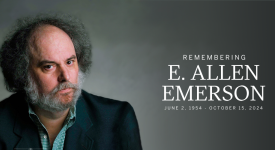

Remembering Turing Award Winner E. Allen Emerson

10/18/2024 - UT Computer Science mourns the loss of Professor Emeritus E. Allen Emerson, who died on October 15 after an extended illness. Emerson, winner of the Turing Award, the most prestigious award in computer science, had been a faculty member in the Department of Computer Science at The University of Texas at Austin since the early 1980s.

"I'm very sad to learn of Allen's death. He was a good friend and a great scientist," said Don Fussell, Chair of the Department of Computer Science. "His work was a huge step forward in the development of tools that help designers create systems with known, verifiable properties."